Brane: The User Guide

This book is still in development. Everything is currently subject to change.

Welcome to the user guide for the Brane infrastructure!

In this book, we document and outline how to use Brane, a programmable application orchestration framework, from a user perspective.

If you want to know more about Brane before you begin, checkout the overview chapter. Otherwise, we recommend you to read the Before you read chapter. It explains how this book is structured, and also goes through some need-to-know terminology.

Attribution

The icons used in this book (![]() ,

, ![]() ,

, ![]() ) are provided by Flaticon.

) are provided by Flaticon.

Overview

In this chapter, we will provide a brief overview of what the framework is, how it is build and what kind of features it supports.

It is not, however, a complete, technical description of its implementation; for that, we recommend you read our other book.

Brane: Programmable Orchestration of Applications and Networking

Regardless of the context and rationale, running distributed applications on geographically dispersed IT resources often comes with various technical and organizational challenges. If not addressed appropriately, these challenges may impede development, and in turn, scientific and business innovation. We have designed and developed Brane to support implementers in addressing these challenges. Brane makes use of containerization to encapsulate functionalities as portable building blocks. Through programmability, application orchestration can be expressed using intuitive domain-specific languages. As a result, end-users with limited or no programming experience are empowered to compose applications by themselves, without having to deal with the underlying technical details.

In context of the EPI project, Brane is extended to orchestrate data distribution as well. Because the project concerns itself with health data, this orchestration does not just include distributing the data, but also policing access and making sure that applications adhere to both global and local data access policies. The same applies to the network orchestration of Brane; here, too, we have to make sure that secure and policy-appliant networking between different sites is possible and automated by Brane.

The framework in a nutshell

Concretely, the Brane framework is primarily designed to take an application in the form of a workflow and perform the work specified in it over multiple nodes spread over multiple domains, to which we refer as compute sites. This basic idea is shown in figure 1.

An important design feature of Brane is that it tries to be intuitive in use for different roles that users have when developing a workflow. We identify three: system engineers, who build and manage the compute sites; software engineers, who implement compute steps or algorithms; and scientists, who use the algorithms to write workflows that implement their research.

Since Brane's intergration into the EPI project, there is also a fourth role: that of policy makers, who define and write the policies that are related to data handling.

Finally, there is also a fifth, "hidden" role: the Brane administrators, who manage the framework itself.

This separation of concerns means that the framework provides different levels of abstraction to interact with it, where each of these levels are designed to be familiar to the users who will use it.

For system engineers, the framework hosts a number of tools and configuration files that allow them to setup and specify their infrastructures; software engineers can write software in any language they like, and then package that using a script-like domain-specific language (BraneScript); policy makers can define the policies in an already existing reasoner language called eFLINT; and for scientists, the framework provides a natural language-like domain-specific language to write the workflows (Bakery), so their work is easily shareable with scientists who do not have extensive Brane knowledge.

Before you read

As discussed in the overview chapter, the Brane framework is aimed at different kind of users, each of them with their own role within the framework. This split of the framework in terms of roles is referred to as the separation of concerns.

The four roles that this book focusses on are:

- system engineers, who are in charge of one of the compute sites that Brane abstracts over. They have to prepare their site for the framework and discuss its integration with the infrastructure managers.

- software engineers, who write the majority of the software used in the Brane framework. This software will be distributed in the form of packages.

- policy makers, who define and write the policies that are relevant to the framework. These are both data-level policies, which describe who can access what data and how; and network-level policies, which describe where the data can be send on the infrastructure and what kind of security measures are needed when that happens.

- scientists, who orchestrate different packages into a workflow, which eventually implements the computation needed for their research.

To this end, the book itself is split into four groups of chapters, one for each of the roles in the separation of concerns.

Terminology

Before you can begin, there is also some extra terminology that will be used throughout this book and that is useful to know here.

The Brane instance

Looked at from the highest level of abstraction, Brane has a client part (in the form of a command-line tool or a Jupyter notebook) and a server part. The first is referred to as a Brane client, while the latter is referred to as the Brane instance.

Where next

To continue reading, we suggest you start at the first chapter for your role. You can select it in the sidebar to the left.

If you are part of the fifth, "hidden" role (the Brane administrators), you have your own book; we recommend you continue there. It also details how to obtain, compile and run the framework for testing purposes.

Introduction

In this series of chapters, we will discuss the role of system administrators and how they may prepare their system for Brane. The chapters will talk about what the requirements are on their system and what kind of information they are expected to share with the Brane instance. Finally, we will also discuss defining datasets.

To know more about the inner workings of Brane, we recommend you checkout the Brane: A Specification book. That details the framework's inner workings.

Background & Terminology

The Brane instance defines a control node (or central node), which is where the orchestrator itself and associated services run. This node is run by the Brane administrators. Then, as a counterpart to this control node, there is the worker plane, which is composed of all the different compute sites that Brane orchestrates over. Each such compute site is referred to as a domain, a location or, since Brane treats them as a single entity, a worker node. Multiple worker nodes may exist per physical domain (e.g., a single hospital can have multiple domains for different tasks), but Brane will treat these as conceptually different places.

Within the framework, a system administrator is someone who acts as the 'technical owner' of a certain worker node. They are the ones who can make sure their system is prepared and meets the Brane requirements, and who defines the security requirements of any operation of the framework on their system. They are also the ones who make any data technically available that is published from their domain. And although policies are typically handled by policy writers, another role in the framework, in practise, this can be the same person as the system administrator.

The Central node

For every Brane instance, there is typically only one control node. Even if multiple VMs are used, the framework expects it to behave like a single node; this is due to the centralized nature of it.

The control node consists of the following few services:

- The driver service is, as the name suggests, the driving service behing a control node. It takes incoming workflows submitted by scientists, and starts executing them, emitting jobs that need to be executed on the worker nodes.

- The planner service takes incoming workflows submitted to the driver service and plans them. This is simply the act of defining which worker node will execute which task, and takes into account available resources on each of the domains, as well as policies that determine if a domain can actually transfer data or execute the job.

- The registry service (sometimes called central registry service or API service for disambiguation) is the centralized version of the local registry services (see below). It acts as a centralized database for the framework, which provides information about which dataset is located where, which domains are participating and where to find them1, and in addition hosts a central package repository.

- Finally, the proxy service acts as a gateway between the other services and the outside world to enable proxying (i.e., it does not accept proxied requests, but rather creates them). In addition, it is also the point that handles server certificates and parses client certificates for identifications.

For more details, check the specification.

Note that, if you need any compute to happen on the central node, this cannot be done through the central node itself; instead, setup a worker node alongside the central node to emulate the same behaviour.

The Worker node

As specified, a domain typically hosts a worker node. This worker node collectively describes both a local control part of the framework, referred to as the framework delegate, and some computing backend that actually executes the jobs. In this section, we provide a brief overview of both.

The delegate itself consists of a few services. Their exact working is detailled in the specification, but as a brief overview:

- The delegate service is the main service on the delegate, and takes incoming job requests and will attempt to schedule them. This is also the service that directly connects to the compute backend (see below). You can think of it as a local driver service.

- The registry service (sometimes called local registry service for disambiguation) keeps track of the locally available datasets and intermediate results (see the data tutorial for Software Engineers or the data tutorial for Scientists for more information) and acts as a point from where the rest of the framework downloads them.

- The checker service acts as the Policy Enforcement Point (PEP) for the framework. It hosts a reasoner, typically eFLINT, and is queried by both the delegate and registry services to see if operations are allowed.

- Finally, the local node also has proxy service, just like the central node.

As for the compute backend, Brane is designed to connect to different types. An overview:

- A local backend schedules new jobs on the same Docker engine where the control plane of Brane runs. This is the simplest infrastructure of them all, and requires no other preparation than required when installing the control plane. This is typically the choice of backend when the worker node is running on a single server or VM.

- A Kubernetes backend connects to a Kubernetes cluster on which incoming jobs are hosted. This is the recommended large-scale compute option if you need large amounts of compute power, since Kubernetes is designed to natively work with containers.

More information on each backend and how to set it up is discussed in the backends chapter(s).

Next

To start setting up your own worker node, we recommend checking out the installation chapters. These will walk you through everything you need to setup a node, both control nodes and worker nodes.

For information on setting up different backends, check the backend chapters.

Alternatively, if you are looking for extensive documentation on the Brane configuration files relevant to a worker node, checkout the documentation chapters.

Introduction

In these chapters, we will walk you through installing a node in the Brane instance.

There are three types of nodes: a central node (or control node), a worker node and a proxy node (see the generic introduction chapter for more information). These series of chapters will discuss how to install both of them.

First, for any kind of node, you should start by downloading the dependencies on the VM where your worker node will run. Then, install the branectl executable, which will help you in setting up and managing your node.

You can then go into the specifics for each kind of node. You can either setup a control node, worker node or a proxy node.

Dependencies

The first step to install any piece of software is to install its dependencies.

The next section will discuss the runtime dependencies. If you plan to compile the framework instead of downloading the prebuilt executables, you must install both the dependencies in the Runtime dependencies- and Compilation dependencies sections.

Runtime dependencies

In all Brane node types, the Brane services are implemented as containers, which means that the number of runtime dependencies is relatively few.

However, the following dependencies are required:

- You have to install Docker to run the container services. To install, follow one of the following links: Ubuntu, Debian, Arch Linux or macOS (note the difference between Ubuntu and Debian; they use different keys and repositories).

-

If you are running Docker on Linux, it is extremely convenient to set it up such that no root is required:

sudo usermod -aG docker "$USER" Don't forget to log in and -out again after running the above command to make the new changes effective.

Don't forget to log in and -out again after running the above command to make the new changes effective. This effectively gives power to all non-root users that are part of the

This effectively gives power to all non-root users that are part of the docker-group to modify any file as if they had root access. Be careful who you include in this group.

-

- Install the BuildKit plugin for Docker:

If these instructions don't work for you, you can also check the plugin's repository README for more installation methods.# Clone the repo, CD into it and install the plugin # NOTE: You will need to install 'make' # (check https://github.com/docker/buildx for alternative methods if that fails) git clone https://github.com/docker/buildx.git && cd buildx make install # Set the plugin as the default builder docker buildx install # Switch to the buildx driver docker buildx create --use Docker Buildx is included by default in most distributions of Docker noawadays. You can just run the

Docker Buildx is included by default in most distributions of Docker noawadays. You can just run the docker buildx installanddocker buildx create --usefunctions first, and if they work, skip the top ones. - Install OpenSSL for the

branectlexecutable:- Ubuntu / Debian:

sudo apt-get install openssl - Arch Linux:

sudo pacman -Syu openssl - macOS:

# We assume you installed Homebrew (https://brew.sh/) brew install openssl

- Ubuntu / Debian:

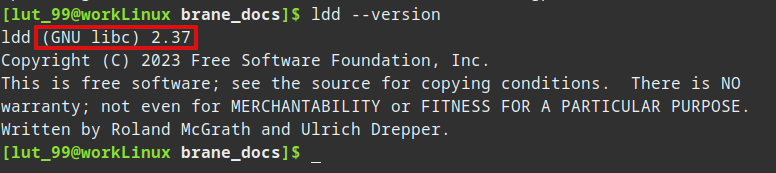

Aside from that, you have to make sure that your system can run executables compiled against GLIBC 2.27 or higher. You can verify this by running:

ldd --version

The top line of the rest will show you the GLIBC version installed on your machine:

If you do not meet this requirement, you will have to compile branectl (and any other non-containerized binaries) yourself on a machine with that version of GLIBC installed or lower. In that case, also install the compilation dependencies.

Next

Congratulations, you have prepared your machine for running (or compiling) a Brane instance! In the next chapter, we will discuss installing the invaluable node management tool branectl. After that, depending on which node you want to setup, you can follow the guide for installing control nodes or worker nodes.

branectl

Your best friend for managing a Brane node is the Brane server Command-Line Tool, or branectl (do not confuse with the user tool, brane or the Brane CLI).

This chapter concerns itself with installing branectl itself. Make sure that you have followed the previous chapter to install the necessary dependencies before you begin.

Precompiled binary

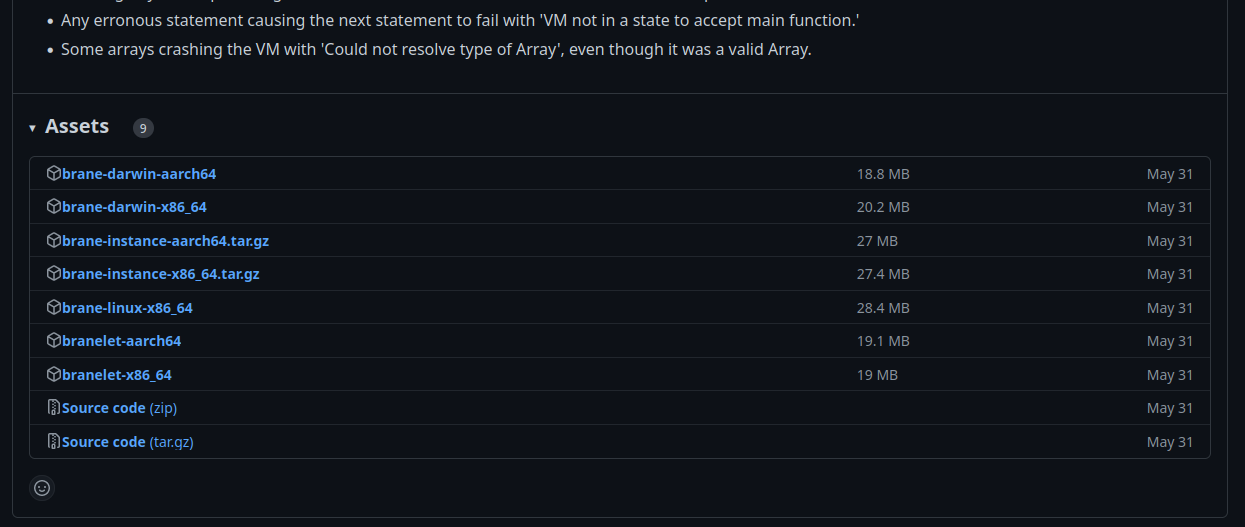

In most cases, it's the easiest to download the precompiled binary from the GitHub repository.

To download it, you can simply go to the repository (https://github.com/epi-project/brane) and navigate to 'tags'. From there, you can select your desired release and choose it from among the list. Alternatively, you can also go to the latest release by clicking this link: https://github.com/epi-project/brane/releases/latest.

Note that

branectlwas only introduced in version 1.0.0, so any version before that will not have a downloadablebranectlexecutable (or any compatible one, for that matter).

Once downloaded, it's highly recommended to move the executable to a location in your PATH (for example, /usr/local/bin). You can do so by running:

sudo mv ./branectl /usr/local/bin/branectl

if you are in the folder where you downloaded the tool.

Alternatively, you can also download the latest version using curl from the command-line:

# For Linux (x86-64)

sudo curl -Lo /usr/local/bin/branectl https://github.com/epi-project/brane/releases/latest/download/branectl-linux-x86_64

# For macOS (Intel)

sudo curl -Lo /usr/local/bin/branectl https://github.com/epi-project/brane/releases/latest/download/branectl-darwin-x86_64

# For macOS (M1/M2)

sudo curl -Lo /usr/local/bin/branectl https://github.com/epi-project/brane/releases/latest/download/branectl-darwin-aarch64

Don't forget to make the executable runnable:

sudo chmod +x /usr/local/bin/branectl

Compile it yourself

Sometimes, though, the executable provided on the repository doesn't suit your needs. This is typically the case if you need a cutting-edge version that isn't released, you have an uncommon OS or processor architecture or an incompatible GLIBC version.

To compile the binary, refer to the compilation instructions over at the Brane: A Specification-book for instructions.

Next

If you can now run branectl --help without errors, congratulations! You have successfully installed the management tool for the Brane instance.

You can now choose what kind of node to install. To install a central node, go to the next chapter; go to the chapter after that to install a worker node; or go the the final chapter to setup a proxy node.

Control node

Before you follow the steps in this chapter, we assume you have installed the required dependencies and installed branectl, as discussed in the previous two chapters.

If you did, then you are ready to install the control node. This chapter will explain you how to do that.

Obtaining images

Just as with branectl itself, there are two ways of obtaining the Docker images and related resources: downloading them from the repository or compiling them. Note, however, that multiple files should be downloaded; and to aid with this, the branectl executable can be used to automate the downloading process for you.

In the future, a third option might be to download the standard images from DockerHub. However, due to the experimental nature of the framework, the images are not yet published. Instead, rely on

branectlto make the process easy for you.

Downloading prebuilt images

The recommended way to download the Brane images is to use branectl. These will download the images to .tar files, which can be send around at your leisure; and, if you will be deploying the framework on a device where internet is limited or restricted, you can also use it to download Brane's auxillary images (ScyllaDB).

Run the following command to download the Brane services themselves:

# Download the images

branectl download services central -f

And to download the auxillary images (run in addition to the previous command):

branectl download services auxillary -f

(the -f will automatically create missing directories for the target output path)

Once these complete successfully, you should have the images for the control node in the directory target/release. While this path may be changed, it is recommended to stick to the default to make the commands in subsequent sections easier.

By default,

branectlwill download the version for which it was compiled. However, you can change this with the--versionoption:# You should change this on all download commands branectl download services central --version 1.0.0Note, however, that not every Brane version may have the same services or the same method of downloading, and so this option may fail. Download the

branectlfor the desired version instead for a more reliable experience.

Compiling the images

The other way to obtain the images is to compile them yourself. If you want to do so, refer to the compilation instructions over at the Brane: A Specification-book for instructions.

Generating configuration

Once you have downloaded the images, it is time to setup the configuration files for the node. These files determine the type of node, as well as any of the node's properties and network specifications.

For a control node, this means generating the following files:

- An infrastructure file (

infra.yml), which will determine the worker nodes available in the instance; - A proxy file (

proxy.yml), which describes if any proxying should occur and how; and - A node file (

node.yml), which will contain the node-specific configuration like service names, ports, file locations, etc.

All of these can be generated with branectl for convenience.

First, we generate the infra.yml file. This can be done using the following command:

branectl generate infra <ID>:<ADDR> ...

Here, multiple <ID>:<ADDR> pairs can be given, one per worker node that is available to the instance. In such a pair, the <ID> is the location ID of that domain (which must be the same as indicated in that node; see the chapter for setting up worker nodes), and the <ADDR> is the address (IP or hostname) where that domain is available.

For example, suppose that we want to instantiate a central node for a Brane instance with two worker nodes: one called amy, at amy-worker-node.com, and one called bob, at 192.0.2.2. We would generate an infra.yml as follows:

branectl generate infra -f -p ./config/infra.yml amy:amy-worker-node.com bob:192.0.2.2

Running this command will generate the file ./config/infra.yml for you, with default settings for each domain. If you want to change these, you can simply use more options and flags in the tool itself (see the branectl documentation or the builtin branectl generate infra --help), or change the file manually (see the infra.yml documentation).

While the

-fflag (fix missing directories) and the-poption (path of generated file) are not required, you will typically use these to make your life easier down the road. See thebranectl generate nodecommand below to find out why.

Next, we will generate the proxy.yml file. Typically, this configuration can be left to the default settings, and so the following command will do the trick in most situations:

branectl generate proxy -f -p ./config/proxy.yml

A proxy.yml file should be available in ./config/proxy.yml after running this command.

The contents of this file will typically only differ if you have advanced networking requirements. If so, consult the branectl documentation or the builtin branectl generate proxy --help, or the proxy.yml documentation.

This file may be skipped if you are setting up an external proxy node for this node. See the chapter on proxy nodes for more information.

Then we will generate the final file, the node.yml file. This file is done last, because it itself defines where the BRANE software may find any of the other configuration files.

When generating this file, it is possible to manually specify where to find each of those files. However, in practise, it is more convenient to make sure that the files are at the default locations that the tools expects. The following tree structure displays the default locations for the configuration of a central node:

<current dir>

├ config

│ ├ certs

│ │ └ <domain certs>

│ ├ infra.yml

│ └ proxy.yml

└ node.yml

The config/certs directory will be used to store the certificates for each of the domains; we will do that in the following section.

Assuming that you have the files stored as above, the following command can be used to create a node.yml for a central node:

branectl generate node -f central <HOSTNAME>

Here, <HOSTNAME> is the address where any worker node may reach the central node. Only the hostname will suffice (e.g., some-domain.com), but any scheme or path you supply will be automatically stripped away.

The -f flag will make sure that any of the missing directories (e.g., config/certs) will be generated automatically.

Once again, you can change many of the properties in the node.yml file by specifying additional command-line options (see the branectl documentation or the builtin branectl generate node --help) or by changing the file manually (see the node.yml documentation).

Due to a bug in one of the framework's dependencies, it cannot handle certificates on IP addresses. To workaround this issue, the

-Hoption is provided; it can be used to specify a certain hostname/IP mapping for this node only. Example:# We can address '192.0.2.2' with 'bob-domain' now branectl generate node -f -H bob-domain:192.0.2.2 central central-domain.comNote that this is local to this domain only; you have to specify this on other nodes as well. For more information, see the

node.ymldocumentation.

Since the above is highly localized, it can be abused to do node-specific routing, by assigning the same hostname to different IPs on different machines. Definitely entering "hacky" territory here, though...

Adding certificates

Before the framework can be fully used, the central node will need the public certificates of the worker nodes to be able to verify their identity during connection. Since we assume Brane may be running in a decentralized and shielded environment, the easiest is to add the domain's certificates to the config/certs directory.

To do so, obtain the public certificate of each of the workers in your instance. Then, navigate to the config/certs directory (or wherever you pointed it to in node.yml), and do the following for each certificate:

- Create a directory with that domain's name (for the example above, you would create a directory named

amyfor that domain) - Move the certificate to that folder and call it

ca.pem.

At runtime, the Brane services will look for the peer domain's identity by looking up the folder with their name in it. Thus, make sure that every worker in your system has a name that you filesystem can represent.

Launching the instance

Finally, now that you have the images and the configuration files, it's time to start the instance.

We assume that you have installed your images to target/release. If you have built your images in development mode, however, they will be in target/debug; see the box below for the command then.

This can be done with one branectl command:

branectl start central

This will launch the services in the local Docker daemon, which completes the setup!

The command above assumes default locations for the images (

./target/release) and for thenode.ymlfile (./node.yml). If you use non-default locations, however, you can use the following flags:

- Use

-nor--nodeto specify another location for thenode.ymlfile:It will define the rest of the configuration locations.branectl -n <PATH TO NODE YML> start central- If you have installed all images to another folder than

./target/release(e.g.,./target/debug), you can use the quick option--image-dirto change the folders. Specifically:branectl start --image-dir "./target/debug" central- If you want to use pre-downloaded image for the auxillary services (

aux-scylla) that are in the same folder as the one indicated by--image-dir, you can specify--local-auxto use the folder version instead:branectl start central --local-aux- You can also specify the location of each image individually. To see how, refer to the

branectldocumentation or the builtinbranectl start --help.

Note that the Scylla database this command launches might need a minute to come online, even though its container already reports ready. Thus, before you can use your instance, wait until

docker psshows all Brane containers running (in particular thebrane-apiservice will crash until the Scylla service is done). You can usewatch docker psif you don't want to re-call the command yourself.

Next

Congratulations, you have configured and setup a Brane control node!

Depending on which domains you are in charge of, you may also have to setup one or more worker nodes or proxy nodes. Note, though, that these are written to be used on their own, so parts of it overlap with this chapter.

Otherwise, you can move on to other work! If you want to test your instance like a normal user, you can go to the documentation for Software Engineers or Scientists.

Worker node

Before you follow the steps in this chapter, we assume you have installed the required dependencies and installed branectl, as discussed in the previous two chapters.

If you did, then you are ready to install a worker node. This chapter will explain you how to do that.

Obtaining images

Just as with branectl itself, there are two ways of obtaining the Docker images: downloading them from the repository or compiling them. Note, however, that multiple files should be downloaded; and to aid with this, the branectl executable can be used to automate the downloading process for you.

In the future, a third option might be to download the standard images from DockerHub. However, due to the experimental nature of the framework, the images are not yet published. Instead, rely on

branectlto make the process easy for you.

Downloading prebuilt images

The recommended way to download the Brane images is to use branectl. These will download the images to .tar files, which can be send around at your leisure.

Run the following command to download the Brane service images for a worker node:

# Download the images

branectl download services worker -f

(the -f will automatically create missing directories for the target output path)

Once these complete successfully, you should have the images for the worker node in the directory target/release. While this path may be changed, it is recommended to stick to the default to make the commands in subsequent sections easier.

By default,

branectlwill download the version for which it was compiled. However, you can change this with the--versionoption:branectl download services worker -f --version 1.0.0Note, however, that not every Brane version may have the same services or the same method of downloading, and so this option may fail. Download the

branectlfor the desired version instead for a more reliable experience.

Compiling the images

The other way to obtain the images is to compile them yourself. If you want to do so, refer to the compilation instructions over at the Brane: A Specification-book for instructions.

Generating configuration

Once you have downloaded the images, it is time to setup the configuration files for the node. These files determine the type of node, as well as any of the node's properties and network specifications.

For a worker node, this means generating the following files:

- A backend file (

backend.yml), which will define how the worker node connects to which backend that will actually execute the tasks; - A proxy file (

proxy.yml), which describes if any proxying should occur and how; - A policy secret for the deliberation API (

policy_deliberation_secret.json), which contains the private key for accessing the Brane-side ofbrane-chk; - A policy secret for the policy expert API (

policy_expert_secret.json), which contains the private key for accessing the management-side ofbrane-chk; - A policy database (

polocies.db), which is the persistent storage forbrane-chk's policies; and - A node file (

node.yml), which will contain the node-specific configuration like service names, ports, file locations, etc.

All of these can be generated with branectl for convenience.

We will first generate a backend.yml file. This will define how the worker node can connect to the infrastructure that will actually execute incoming containers. Multiple backend types are possible (see the series of chapters on it), but by default, the configuration assumes that work will be executed on the local machine's Docker daemon.

Thus, to generate such a backend.yml file, you can use the following command:

branectl generate backend -f -p ./config/backend.yml local

Running this command will generate the file ./config/backend.yml for you, with default settings for how to connect to the local daemon. If you want to change these, you can simply use more options and flags in the tool itself (see the branectl documentation or the builtin branectl generate backend --help), or change the file manually (see the backend.yml documentation).

While the

-fflag (--fix-dirs, fix missing directories) and the-poption (--path, path of generated file) are not required, you will typically use these to make your life easier down the road. See thebranectl generate nodecommand below to find out why.

Next up is the proxy.yml file. Typically, these can be left to the default settings, and so the following command will do the trick in most situations:

branectl generate proxy -f -p ./config/proxy.yml

A proxy.yml file should be available in ./config/proxy.yml after running this command.

The contents of this file will typically only differ if you have advanced networking requirements. If so, consult the branectl documentation or the builtin branectl generate proxy --help, or the proxy.yml documentation.

This file may be skipped if you are setting up an external proxy node for this node. See the chapter on proxy nodes for more information.

Next, we will generate the policy keys. To do so, run the following two commands:

branectl generate policy_secret -f -p ./config/policy_deliberation_secret.json

branectl generate policy_secret -f -p ./config/policy_expert_secret.json

The default settings should suffice. If not, check branectl generate policy_secret --help for more information.

Then, we will generate the policy database. This is not a configuration file, but does need to be bootstrapped and explicitly passed to the node's brane-chk service. To generate it, run:

branectl generate policy_db -f -p ./policies.db

Finally, we will generate the node.yml file. This file is done last, because it itself defines where BRANE software may find any of the others.

When generating this file, it is possible to manually specify where to find each of those files. However, in practise, it is more convenient to make sure that the files are at the default locations that the tools expects. The following tree structure displays the default locations for the configuration of a worker node:

<current dir>

├ config

│ ├ certs

│ │ └ <domain certs>

│ ├ backend.yml

│ ├ policy_deliberation_secret.yml

│ ├ policy_expert_secret.yml

│ └ proxy.yml

├ policies.db

└ node.yml

The config/certs directory will be used to store the certificates for this worker node and any node it wants to download data from. We will do that in the following section.

Assuming that you have the other configuration files stored at their default locations, the following command can be used to create a node.yml for a worker node:

branectl generate node -f worker <HOSTNAME> <LOCATION_ID>

Here, the <HOSTNAME> is the address where any worker node may reach the central node. Only the hostname will suffice (e.g., some-domain.com), but any scheme or path you supply will be automatically stripped away. Then, the <LOCATION_ID> is the identifier that the system will use for your location. Accordingly, it must be unique in the instance, and you must choose the same one as defined in the central node of the instance.

The -f flag will make sure that any of the missing directories (e.g., config/certs) will be generated automatically.

For example, we can generate a node.yml file for a worker with the identifier bob:

branectl generate node -f worker 192.0.2.2 bob

Once again, you can change many of the properties in the node.yml file by specifying additional command-line options (see the branectl documentation or the builtin branectl generate node --help) or by changing the file manually (see the node.yml documentation).

Due to a bug in one of the framework's dependencies, it cannot handle certificates on IP addresses. To workaround this issue, the

-Hoption is provided; it can be used to specify a certain hostname/IP mapping for this node only. Example:# We can address '192.0.2.2' with 'some-domain' now branectl generate node -f -H some-domain:192.0.2.2 worker bob-domain.com bobNote that this is local to this domain only; you have to specify this on other nodes as well. For more information, see the

node.ymldocumentation.

Generating certificates

In contrast to setting up a control node, a worker node will have to strongly identify itself to prove to other worker nodes who it is. This is relevant, because worker nodes may want to download data from one another; and if this dataset is private, then the other domains likely won't share it unless they know who they are talking to.

In Brane, the identity of domains is proven by the use of X.509 certificates. Thus, before you can start your worker node, we will have to generate some certificates.

Server-side certificates

Every worker node is required to have at least a certificate authority (CA) certificate and a server certificate. The first is used as the "authority" of the domain, which is used to sign other certificates such that the worker can see that it has been signed by itself in the past. The latter, in contrast, is used to provide the identity of the worker in case it plays the role of a server (some other domain connects to us and requests a dataset).

Once again, we can use the power of branectl to generate both of these certificates for us. Use the following command to generate both a certificate autority and server certificate:

branectl generate certs -f -p ./config/certs server <LOCATION_ID> -H <HOSTNAME>

where <LOCATION_ID> is the identifier of the worker node (the one configured in the node.yml file), and <HOSTNAME> is the hostname that other domains can connect to this domain to.

You can omit the -H <HOSTNAME> flag to default the hostname to be the same as the <LOCATION_ID>. This is useful where you've given manual host mappings when generating the node.yml file (i.e., the -H option there).

For example, to generate certificates for the domain amy that lives at amy-worker-node.com:

branectl generate certs -f -p ./config/certs server amy -H amy-worker-node.com

This should generate multiple files in the ./config/certs directory, chief of which are ca.pem and server.pem.

Certificate generation is done using cfssl, which is dynamically downloaded by

branectl. The checksum of the downloaded file is asserted, and if you ever see a checksum-related error, then you might be dealing with a fake binary that is being downloaded under a real address. In that case, tread with care.

When the certificates are generated, be sure to share ca.pem with the central node. If you are also adminstrating that node, see here for instructions on what to do with it.

Client-side certificates

The previous certificates only authenticate a server to a client; not the other way around. That is where the client certificates come into play.

The power of client certificates come from the fact that they are signed using the certificate authority of the domain to which they want to authenticate. In other words, the domain has to "approve" that a certain user exists by creating a certificate for them, and then sending it over.

Note, however, that currently, Brane does not use any hostnames or IPs embedded in the client certificate. This means that anyone with the client certificate can obtain access to the domain as if they were the user for which it was issued. Treat the certificates with care, and be sure that the client is also careful with the certificate.

If a certificate is leaked or compromised, don't worry; the certificate only proves the identity of a user. What kind of rights that user has can be separately determined (see the chapter series for policy experts), and so you can simply withdraw any rights that user has when it happens.

To generate a client certificate, its easiest to navigate to the ./config/certs directory where you generate the server certificates. Then, you can run:

branectl generate certs client <LOCATION_ID> -H <HOSTNAME> -f -p ./client-certs

Note, that the <LOCATION_ID> is now the ID of the worker for which you are generating the certificate, and <HOSTNAME> is their address. Similarly to server certificates, you can omit -H <HOSTNAME> to default to the <LOCATION_ID>.

Note the

-fand-poptions. These are optional, and work together to redirect the output of the commands to a nested folder calledclient-certs. This is however very recommendable, since running this command without that flag in the server certificates folder will accidentally clear theca.pemfile, rendering the rest of the certificates useless.

For example, contuining the example in the previous subsection, we now generate a client certificate for bob at bobs-emporium.com:

branectl generate certs client bob -H 192.0.2.2

Once the client certificates are generated, you can share the ca.pem and client-id.pem files with the client who intends to connect to this node.

Adding client certificates of other domains

If your worker node needs to download data from other worker nodes, you will have to add the client certificates they generated to your configuration.

The procedure to do so is identical as for central nodes. For every pair of a ca.pem and client-id.pem certificates you want to:

- Create a directory with that domain's name in the

certsdirectory (for the example, you would create a directory namedcerts/amyfor a domain namedamy) - Move the certificates to that folder.

At runtime, whenever your worker node will need to download a dataset from another worker, it will read the certificates in that worker's folder if they exist to authenticate itself.

Writing policies

Before you launch the instance, you may want to change the node's policy. If not, then the default policy kicks in; which is deny all.

To change which policies are active, the policy experts needs access tokens to authorize themselves. You can generate these by running:

branectl generate policy_token <INITIATOR> <SYSTEM> <DURATION> -s <PATH_TO_SECRET>

where:

<INITIATOR>is the name of the policy expert (or some other identifier);<SYSTEM>is some identifier for the system that acts on their behalf. Typically, this would be the identifier of the domain they are working for.<DURATION>is the duration for which the token is valid. You should give it as numbers, withsfor seconds,mfor minutes,dfor days oryfor years (e.g.,31d).<PATH_TO_SECRET>is the path to the relevant policy secret you generated earlier.

Note that the command writes the token to ./policy_token.json, unless you change the path with -p. You can then share this token with the policy expert.

More information to manage policies can be found in the policy expert's documentation.

Launching the instance

Finally, now that you have the images and the configuration files, it's time to start the instance.

We assume that you have installed your images to target/release. If you have built your images in development mode, however, they will be in target/debug; see the box below for the command then.

This can be done with one branectl command:

branectl start worker

This will launch the services in the local Docker daemon, which completes the setup!

The command above assumes default locations for the images (

./target/release) and for thenode.ymlfile (./node.yml). If you use non-default locations, however, you can use the following flags:

- Use

-nor--nodeto specify another location for thenode.ymlfile:It will define the rest of the configuration locations.branectl -n <PATH TO NODE YML> start worker- If you have installed all images to another folder than

./target/release(e.g.,./target/debug), you can use the quick option--image-dirto change the folders. Specifically:branectl start --image-dir "./target/debug" worker- You can also specify the location of each image individually. To see how, refer to the

branectldocumentation or the builtinbranectl start --help.

Next

Congratulations, you have configured and setup a Brane worker node!

If you are in charge of more worker nodes, you can repeat the steps in this chapter to add more. If you are also charged with setting up a control node, you can check the previous chapter for control-node specific instructions.

Alternatively, you can also see if a proxy node is something for your use-case in the next chapter.

Otherwise, you can move on to other work! If you want to test your node like a normal user, you can go to the documentation for Software Engineers or Scientists.

Proxy node

Before you follow the steps in this chapter, we assume you have installed the required dependencies and installed branectl, as discussed in the previous two chapters.

If you did, then you are ready to install a proxy node. This chapter will explain you how to do that.

Obtaining images

Just as with branectl itself, there are two ways of obtaining the Docker images: downloading them from the repository or compiling them. Note, however, that multiple files should be downloaded; and to aid with this, the branectl executable can be used to automate the downloading process for you.

In the future, a third option might be to download the standard images from DockerHub. However, due to the experimental nature of the framework, the images are not yet published. Instead, rely on

branectlto make the process easy for you.

Downloading prebuilt images

The recommended way to download the Brane images is to use branectl. These will download the images to .tar files, which can be send around at your leisure.

Run the following command to download the Brane service images for a worker node:

# Download the images

branectl download services proxy -f

(the -f will automatically create missing directories for the target output path)

Once these complete successfully, you should have the images for the worker node in the directory target/release. While this path may be changed, it is recommended to stick to the default to make the commands in subsequent sections easier.

By default,

branectlwill download the version for which it was compiled. However, you can change this with the--versionoption:branectl download services proxy -f --version 1.0.0Note, however, that not every Brane version may have the same services or the same method of downloading, and so this option may fail. Download the

branectlfor the desired version instead for a more reliable experience.

Compiling the images

The other way to obtain the images is to compile them yourself. If you want to do so, refer to the compilation instructions over at the Brane: A Specification-book for instructions.

Generating configuration

Once you have downloaded the images, it is time to setup the configuration files for the node. These files determine the type of node, as well as any of the node's properties and network specifications.

For a worker node, this means generating the following files:

- A proxy file (

proxy.yml), which describes if any proxying should occur and how; and - A node file (

node.yml), which will contain the node-specific configuration like service names, ports, file locations, etc.

All of these can be generated with branectl for convenience.

We first generate the proxy.yml file. Typically, these can be left to the default settings, and so the following command will do the trick in most situations:

branectl generate proxy -f -p ./config/proxy.yml

A proxy.yml file should be available in ./config/proxy.yml after running this command.

The contents of this file will typically only differ if you have advanced networking requirements. If so, consult the branectl documentation or the builtin branectl generate proxy --help, or the proxy.yml documentation.

While the

-fflag (--fix-dirs, fix missing directories) and the-poption (--path, path of generated file) are not required, you will typically use these to make your life easier down the road. See thebranectl generate nodecommand below to find out why.

Then we will generate the node.yml file. This file is done last, because it itself defines where Brane software may find any of the others.

When generating this file, it is possible to manually specify where to find each of those files. However, in practise, it is more convenient to make sure that the files are at the default locations that the tools expects. The following tree structure displays the default locations for the configuration of a proxy node:

<current dir>

├ config

│ ├ certs

│ │ └ <domain certs>

│ └ proxy.yml

└ node.yml

The config/certs directory will be used to store the certificates for this proxy node and any node it wants to download data from. We will do that in the following section.

Assuming that you have the other configuration files stored at their default locations, the following command can be used to create a node.yml for a proxy node:

branectl generate node -f proxy <HOSTNAME>

Here, the <HOSTNAME> is the address where any other node may reach the proxy node. Only the hostname will suffice (e.g., some-domain.com), but any scheme or path you supply will be automatically stripped away.

The -f flag will make sure that any of the missing directories (e.g., config/certs) will be generated automatically.

For example, we can generate a node.yml file for a proxy found at 192.0.2.2:

branectl generate node -f proxy 192.0.2.2

Once again, you can change many of the properties in the node.yml file by specifying additional command-line options (see the branectl documentation or the builtin branectl generate node --help) or by changing the file manually (see the node.yml documentation).

Due to a bug in one of the framework's dependencies, it cannot handle certificates on IP addresses. To workaround this issue, the

-Hoption is provided; it can be used to specify a certain hostname/IP mapping for this node only. Example:# We can address '192.0.2.2' with 'some-domain' now branectl generate node -f -H some-domain:192.0.2.2 proxy bob-domain.comNote that this is local to this domain only; you have to specify this on other nodes as well. For more information, see the

node.ymldocumentation.

Generating certificates

In contrast to setting up a control node, a proxy node will have to strongly identify itself to prove to other nodes who it is. This is relevant, because worker nodes may want to download data from one another through their proxy nodes; and if this dataset is private, then the other domains likely won't share it unless they know who they are talking to.

In Brane, the identity of domains is proven by the use of X.509 certificates. Thus, before you can start your proxy node, we will have to generate some certificates.

Server-side certificates

Every proxy node is required to have at least a certificate authority (CA) certificate and a server certificate. The first is used as the "authority" of the domain, which is used to sign other certificates such that the proxy can see that it has been signed by itself in the past. The latter, in contrast, is used to provide the identity of the proxy in case it plays the role of a server (some other domain connects to us and requests a dataset).

Once again, we can use the power of branectl to generate both of these certificates for us. Use the following command to generate both a certificate autority and server certificate:

branectl generate certs -f -p ./config/certs server <LOCATION_ID> -H <HOSTNAME>

where <LOCATION_ID> is the identifier of the proxy node (the one configured in the node.yml file), and <HOSTNAME> is the hostname that other domains can connect to this domain to.

You can omit the -H <HOSTNAME> flag to default the hostname to be the same as the <LOCATION_ID>. This is useful where you've given manual host mappings when generating the node.yml file (i.e., the -H option there).

For example, to generate certificates for the domain amy that lives at amy-proxy-node.com:

branectl generate certs -f -p ./config/certs server amy -H amy-proxy-node.com

This should generate multiple files in the ./config/certs directory, chief of which are ca.pem and server.pem.

Certificate generation is done using cfssl, which is dynamically downloaded by

branectl. The checksum of the downloaded file is asserted, and if you ever see a checksum-related error, then you might be dealing with a fake binary that is being downloaded under a real address. In that case, tread with care.

When the certificates are generated, be sure to share ca.pem with the central node. If you are also adminstrating that node, see here for instructions on what to do with it.

Client-side certificates

The previous certificates only authenticate a server to a client; not the other way around. That is where the client certificates come into play.

The power of client certificates come from the fact that they are signed using the certificate authority of the domain to which they want to authenticate. In other words, the domain has to "approve" that a certain user exists by creating a certificate for them, and then sending it over.

Note, however, that currently, Brane does not use any hostnames or IPs embedded in the client certificate. This means that anyone with the client certificate can obtain access to the domain as if they were the user for which it was issued. Treat the certificates with care, and be sure that the client is also careful with the certificate.

If a certificate is leaked or compromised, don't worry; the certificate only proves the identity of a user. What kind of rights that user has can be separately determined (see the chapter series for policy experts), and so you can simply withdraw any rights that user has when it happens.

To generate a client certificate, its easiest to navigate to the ./config/certs directory where you generate the server certificates. Then, you can run:

branectl generate certs client <LOCATION_ID> -H <HOSTNAME> -f -p ./client-certs

Note, that the <LOCATION_ID> is now the ID of the proxy for which you are generating the certificate, and <HOSTNAME> is their address. Similarly to server certificates, you can omit -H <HOSTNAME> to default to the <LOCATION_ID>.

Note the

-fand-poptions. These are optional, and work together to redirect the output of the commands to a nested folder calledclient-certs. This is however very recommendable, since running this command without that flag in the server certificates folder will accidentally clear theca.pemfile, rendering the rest of the certificates useless.

For example, contuining the example in the previous subsection, we now generate a client certificate for bob at bobs-emporium.com:

branectl generate certs client bob -H 192.0.2.2

Once the client certificates are generated, you can share the ca.pem and client-id.pem files with the client who intends to connect to this node.

Adding client certificates of other domains

If your proxy node needs to download data from other nodes, you will have to add the client certificates they generated to your configuration.

The procedure to do so is identical as for central nodes. For every pair of a ca.pem and client-id.pem certificates you want to:

- Create a directory with that domain's name in the

certsdirectory (for the example, you would create a directory namedcerts/amyfor a domain namedamy) - Move the certificates to that folder.

At runtime, whenever your proxy node will need to download a dataset from another node, it will read the certificates in that node's folder if they exist to authenticate itself.

Launching the instance

Finally, now that you have the images and the configuration files, it's time to start the instance.

We assume that you have installed your images to target/release. If you have built your images in development mode, however, they will be in target/debug; see the box below for the command then.

This can be done with one branectl command:

branectl start proxy

This will launch the services in the local Docker daemon, which completes the setup!

The command above assumes default locations for the images (

./target/release) and for thenode.ymlfile (./node.yml). If you use non-default locations, however, you can use the following flags:

- Use

-nor--nodeto specify another location for thenode.ymlfile:It will define the rest of the configuration locations.branectl -n <PATH TO NODE YML> start proxy- If you have installed all images to another folder than

./target/release(e.g.,./target/debug), you can use the quick option--image-dirto change the folders. Specifically:branectl start --image-dir "./target/debug" proxy- You can also specify the location of each image individually. To see how, refer to the

branectldocumentation or the builtinbranectl start --help.

Next

Congratulations, you have configured and setup a Brane proxy node!

If you are in charge of more proxy nodes, you can repeat the steps in this chapter to add more. If you are also charged with setting up a control node or worker node, you can check the control node chapter or the worker node chapter, respectively, for node specific instructions.

Otherwise, you can move on to other work! If you want to test your node like a normal user, you can go to the documentation for Software Engineers or Scientists.

Introduction

Welcome to the series of chapters detailing the policy expert role!

These chapters will discuss everything needed to successfully play this role, including technical knowledge, setup, and considerations when writing policy for Brane.

This chapter will first briefly outline the role as a whole. Then, the next-section gives pointers on where to start.

Policy experts

The role of a policy expert within Brane is to understand the restrictions and regulations that apply to compute infrastructure and datasets managed by a domain, and then to translate those into computable policy. By this we mean some kind of representation -e.g., declarative rules- that represent these regulations and that can be used by the system to automatically give access to users or not.

Policy experts walk the line between management and technology. They both need to understand the legal- and business side of sharing data and the technology in order to map from the first to the second successfully. Moreover, they need to be careful in how they design their reasoner in what information it divulges, as any policy information may be sensitive one way or another (e.g., private domain internal regulations, patient consent, etc).

In order to make this process as easy as possible, Brane allows domains to choose their own policy language, as long as this translates to either an allow/deny at the end of evaluation1. Currently, Brane uses eFLINT as the default language, but see the custom reasoning backend chapters for more information on how to implement custom languages.

Eventually, Brane will support a system where (parts of) policy are shared with other domains to drastically increase efficiency. For now, though, this is future work.

Next

To start learning about how to be a policy expert for Brane, start by installing the required tooling. Then, depending on whether your administrator asks you to use eFLINT or another language, either check the eFLINT introduction chapters, or documentation for the language implemented by your administrator. Additionally, you can check the docs for implementing a custom reasoning backend if more customization is needed.

Installation

As a policy expert, you will write policies and then manage them in the node's brane-chk-service.

To do the former, you need a development environment for the reasoner backend you will be using. See the Installing the eFLINT Interpreter-section to find out how to setup a local environment for eFLINT.

For the latter, you can choose between the Policy Reasoner GUI, a visual interface, or branectl, a command-line interface. Installing either of these is explained in Installing management tools-section.

Installing the eFLINT Interpreter

To develop and test your policies, it is recommended to have an offline environment available where you can iteratively test your policies as you construct them.

The most mature eFLINT interpreter is the Haskell implementation. This version is up-to-date with the most recent eFLINT edition, and supports a human-friendly REPL to examine and change a knowledge base for testing purposes.

To install it, download and install Haskell as described in the README of the project. In short, open a terminal and run:

# On Ubuntu

apt-get install cabal-install ghc

cabal update

Once installed, clone the repository with the interpreter and build it with cabal:

git clone https://gitlab.com/eflint/haskell-implementation ./eflint

cd eflint

cabal configure

cabal build

cabal install

After completion, you should be able to run the interactive eFLINT prompt by running:

eflint-repl

Use eflint-repl --help to see more options, or type :help when you are in the REPL.

Also consider adding syntax highlighting for your favourite code editor. There are syntax highlighters for Visual Studio Code and Sublime.

Installing management tools

To manage the local Brane node, you need to use a tool that can interface with the reasoner and push/pull policies, change the active policy and test drive your changes. Currently, there are two tools available that can do this:

- The official Policy Reasoner GUI, which provides a visual interface; and

- The Brane CTL management tool, which provides a terminal interface.

Installing the first is recommended in most cases, except when you're in need of a quick way to manage them (e.g., in scenarios where the system administrator also takes the role of the policy expert) or are more comfortable with terminals in general.

The Policy Reasoner GUI

The most up-to-date instructions for installing the policy reasoner GUI are described here. Below follows a summary for convenience.

First, clone the repository to your machine using Git:

git clone https://github.com/epi-project/policy-reasoner-gui && cd ./policy-reasoner-gui

Then, you can either install the GUI natively or in a Docker container.

For the former, install Rust (rustup is usually the easiest) and NPM. Then, open two terminals in the repository directory, and run

cd client

npx parcel

in one to launch the HTML client, and

cargo run

in the other to launch the client's backend.

For the latter, install Docker (macOS, Ubuntu, Debian or Arch Linux) (don't forget to enable sudoless access if you're on Linux). Then, run:

docker compose up -d

to build & run both the client and the client's backend.

The branectl management tool

To install the management tool, you can download the binary from the repository or compile it from scratch.

To download, you simply go to the release and download the branectl binary of your choice. There are options to download it for Linux (branectl-linux-x86_64), Intel Macs (branectl-darwin-x86_64) or M1/M2/M3 Macs (branectl-darwin-aarch64).

To compile the binary yourself, install GCC's gcc and g++, CMake and Rust (rustup is usually the easiest) first if you haven't already. Then, clone the repository and run the make.py script:

git clone https://github.com/epi-project/brane && cd ./brane

./make.py ctl

The resulting binary can be found under target/release/branectl.

Either way, it's nice if you add the binary to your PATH to make executing it easier. To do so, you can copy it to /usr/local/bin on Linux or macOS:

sudo cp <BINARY_PATH> /usr/local/bin/branectl

If you can execute branectl --help without problems, you know the installation succeeded.

Next

Now that you have the management client of your choice installed, move to the next chapter to learn how to use it.

You can also consult chapters on how to write eFLINT policies, if that's the language of your node, or else how to write new backends.

Managing policies

This chapter discusses how you can manage the policies in a running policy reasoner.

The first section focusses on obtaining access keys from your adminsitrator. Then, we discuss either managing the checker using the visual interface or using the terminal interface.

Acquiring keys

Before you begin, you need to have access to the reasoner in question. This is currently implemented as two JSON Web Tokens (JWTs): one is used to access the deliberation API of the checker, which is where Brane connects to check workflows; and the other is used to access the policy expert API of the checker, which is used to manage which policies are active. For full convenience, you should try to acquire both so you can test the checker yourself.

If your adminstrator is unsure how to do this (or you are the administrator), consult the relevant section of their part of the wiki.

Visual management (Policy Reasoner GUI)

Terminal management (branectl)

Next

Introduction

This chapter will be written soon.

Introduction

This chapter will be written soon.

The Policy File

This page is for the deprecated method of entering policies into the system using a

policies.ymlfile. A better method (involving eFLINT) is implemented through thepolicy-reasonerproject.

Brane used to read its policies from a so-called policy file (also known as policies.yml) which defines a very simplistic set of access-control policies.

Typically, there is one such policy file per domain, which instructs the "reasoner" for that domain what is should allow and what not.

In this chapter, we discuss how one might write such a policy file. In particular, we will discuss the general layout of the file, and then the two kinds of policies currently supported: user policies and container policies.

Overview

The policies.yml file is written in YAML for the time being.

It has two sections, each of them corresponding to a kind of policy (users and containers, respectively). Each section is then a simple list of rules. At runtime, the framework will consider the rules top-to-bottom, in order, to find the first rule that says something about the user/dataset pair or the container in question. A full list of available policies can be found below.

Before that, we will first describe the kinds of policies in some more detail in the following sections.

User policies

User policies concern themselves what a user may access, and then specifically, which dataset they may access. These policies thus always describe some kind of rule on a pair of a user (known by their ID) and a dataset (also known by its ID).

As a policy expert, you may assume that by the time your policy file is consulted, the framework has already verified the user's ID. As for datasets, your policies are only consulted when data is accessed on your own domain, and so you can also assume that dataset IDs used correspond to the desired dataset.

Note that which user IDs and dataset IDs to use should be done in cooperation with the system administrator of your domain. Currently, the framework doesn't provide a safe way of communicating which IDs are available to the policy file, so you will have to retrieve the up-to-date list of IDs the old-fashioned way.

Container policies

Container policies concern themselves with which container is allowed to be run at a certain domain. Right now, it would have seemed obvious that they are triplets of users, datasets and containers - but due to time constraints, they currently only feature a container hash (e.g., its ID) that says if they are allowed to be implemented or not.

Because the ID of a container is a SHA256-hash, you can safely assume that whatever container your referencing will actually reference that container with the properties you know of it. However, similarly to user policies, there is no list available in the framework itself of known container hashes; thus, this list must be obtained by asking the system's administrator or, maybe more relevant, a scientist who wants to run their container.

Policies

In this section, we describe the concrete policies and their syntax. Remember that policies are checked in-order for a matching rule, and that the framework will throw an error if no matching rule is found.

In general, there are two possible actions to be taken for a given request: allow it, in which case the framework proceeds, or deny it, in which case the framework aborts the request. For each of those action, though, there are multiple ways of matching a user/dataset pair or a container hash, which results in the different policies described below.

Syntax-wise, the policies are given as a vector of dictionaries, where each dictionary is a policy. Then, every such dictionary must always have the policy key, which denotes its type (see the two sections below). Any other key is policy-dependent.

User policies

The following policies are available for user/dataset pairs:

allow: Matches a specific user/dataset pair and allows it.user: The identifier of the user to match.data: The identifier of the dataset to match.

deny: Matches a specific user/dataset pair and denies it.user: The identifier of the user to match.data: The identifier of the dataset to match.

allow_user_all: Matches all datasets for the given user and allows them.user: The identifier of the user to match.

deny_user_all: Matches all datasets for the given user and denies them.user: The identifier of the user to match.

allow_all: Matches all user/dataset pairs and allows them.deny_all: Matches all user/dataset pairs and denies them.

Container policies

The following policies are available for containers:

allow: Matches a specific container hash and allows it.hash: The hash of the container to match.name(optional): A human-friendly name for the container (no effect on policy, but for debugging purposes).

deny: Matches a specific container hash and denies it.hash: The hash of the container to match.name(optional): A human-friendly name for the container (no effect on policy, but for debugging purposes).

allow_all: Matches all container hashes and allows them.deny_all: Matches all container hashes and denies them.

Example

The following snippet is an example policy file:

# The user policies

users:

# Allow the user 'Amy' to access the datasets 'A', 'B', but not 'C'

- policy: allow

user: Amy

data: A

- policy: allow

user: Amy

data: B

- policy: deny

user: Amy

data: C

# Specifically deny access to `Dan` to do anything

- policy: deny_user_all

user: Dan

# For any other case, we deny access

- policy: deny_all

# The container policies

containers:

# We allow the `hello_world` container to be run

- policy: allow

hash: "GViifYnz2586qk4n7fdyaJB7ykASVuptvZyOpRW3E7o="

name: hello_world

# But not the `cat` container

- policy: deny

hash: "W5WS23jAAtjatN6C5PQRb0JY3yktDpFHnzZBykx7fKg="

name: cat

# Any container not matched is allowed (bad practice, but to illustrate)

- policy: allow_all

Introduction

In these series of chapters, we will discuss how you can develop and then upload packages to the Brane instance for use by scientists and other software engineers.

First, in the next section, we will give a bit of background that will help you understand what you're doing. Then, in the next chapter, we will help you preparing your local machine for Brane package development.

Background & Terminology

In Brane, every kind of job that is executed is done so by submitting a workflow. This is simply a high-level specification of which external functions will be called in what order, and how data is passed between them.

You may think of them as a program, except that it's meant to be more high-level and abstracted over the actual algorithms that are run part of the execution.

That means that the bulk of the work will be done in these external function calls. Because of this modularity present in these workflows, Brane collects these functions in packages, which may be used in zero or more workflows as independent compute steps.

Technically, these packages are implemented as containers, which means that they might be written in any language (as long as they adhere to the protocol Brane uses to communicate with packages) and will ship together with all required dependencies.

As a consequence, this means that Brane package calls are, in principle, always completely self-contained. After execution, the container is destroyed, removing any work that the package has done. The only way to retrieve results is by either sending them back to the workflow-space directly as a return value (which can contain limited data), or by returning so-called datasets or intermediate results (see the scientist chapters for more background information, or the software engineer's data chapter for practical usage).

Next

Before we will go more in-depth on the functionality and process of developing Brane packages, we will first walk you through setting up your machine for development in the next chapter.

Then, in the chapter after that, we will discuss the different types of packages supported by Brane and how to create them.

Installation

To develop Brane packages, you will need three components:

- The Brane Command-Line Interface (Brane CLI), which you use to package your code and publish it to an instance

- A Docker engine, which is used to build the package containers by the Brane CLI

- Support for your language of choice

The third component, the language support, is hard to generalize as it will depend on the language you choose. However, there is an import difference in setup between interpreted languages and compiled languages.

For interpreted languages, (such as Python), you should setup your machine in such a way that it is able to run the scripts locally (for development purposes). Additionally, you should make sure that you have some way of installing the interpreter (and any dependencies) on Ubuntu (since the Brane containers are based on that OS).

For compiled languages (such as Rust), you should prepare your machine to not only develop but also compile the language for use in an Ubuntu container. Then, you should only package the resulting binaries so that the package container remains as lightweight as possible.

The other two prerequisites will be discussed below.

The Docker engine

First, you should install Docker on the machine that you will use for development. Brane will use this to build the containers, since Docker features an excellent build system. However, Brane also requires you to have the BuildKit plugin installed on top of the normal Docker build system.

To install Docker, refer to their official documentation (macOS, Ubuntu, Debian or Arch Linux). Note that, if you install Docker on Linux, you should make sure that you can execute Docker commands without sudo (see here, first section) Then, you should install the Buildkit plugin by running the following commands:

# Clone the repo, CD into it and install the plugin (check https://github.com/docker/buildx for alternative methods if that fails)

git clone https://github.com/docker/buildx.git && cd buildx

make install

# Switch to the buildx driver

docker buildx create --use

The Brane CLI

With Docker installed, you may then install the Brane Command-Line Interface.

You can either download the binary directly from the repository, or build the tool from scratch. The first method should be preferred in most cases, which the latter is only required if you require a non-released version or run Brane on non-x86_64 hardware.

Note that you probably already installed the Brane Command-Line Interface if you've installed a node on your local machine (follow this guide, for example).

Downloading the binary

To download the Brane CLI binary, use the following commands:

# For Linux

sudo wget -O /usr/local/bin/brane https://github.com/epi-project/brane/releases/latest/download/brane-linux-x86_64

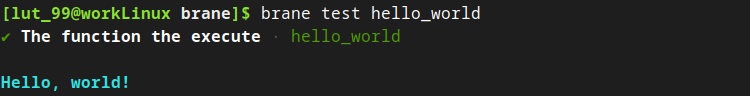

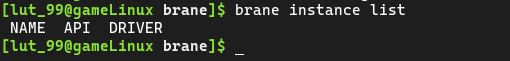

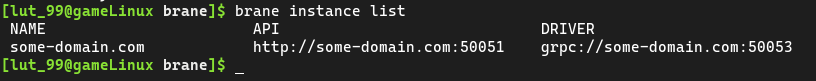

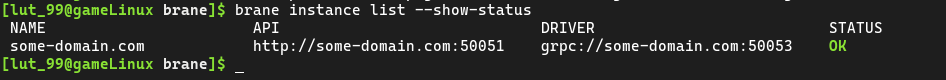

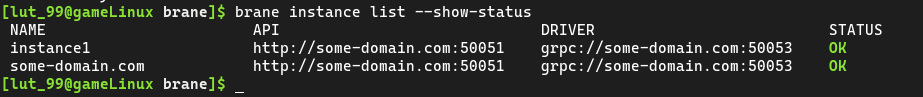

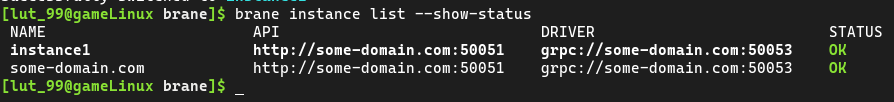

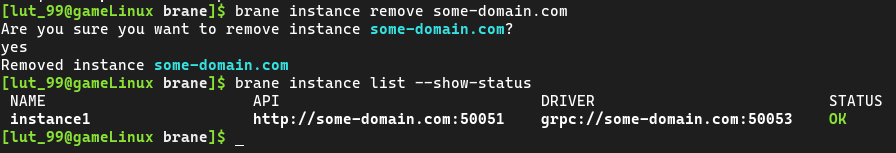

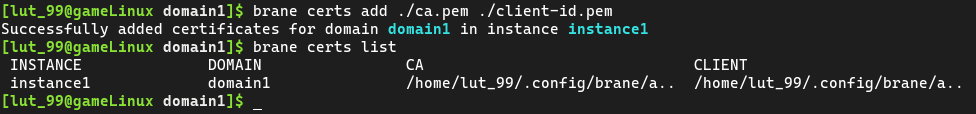

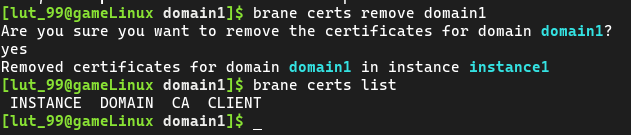

# For macOS (Intel)