Brane: A Specification

This book is still in development. Everything is currently subject to change.

This book documents the workings of the Brane framework, so it may be maintained easily by future parties or further the understanding of users who want to know exactly what they are working with. Note, however, that this book is not a code documentation, nor is it a user guide; we solely focus on its design. It can be thought of as a kind of (informal) specification of a Brane framework.

For a how to work with the framework, please check the Brane: The User Guide book: it describes how to work with Brane for all four of the framework's target roles (system engineers, policy experts, software engineers and scientists).

Code documentation is provided by the Rust automated documentation generation (cargo doc). A version generated for BRANE 2.0.0 on x86-64 machines can be found hosted here. For other versions or platforms, check the the source code itself.

To start, first head over to the Overview page, which talks about Brane in general and how this book will look like. From there, you can select topics that are most of interest.

Attribution

The icons used in this book (![]() ,

, ![]() ,

, ![]() ) are provided by Flaticon.

) are provided by Flaticon.

Overview of the Brane framework

The Brane framework is an infrastructure designed to facilitate computing over multiple compute sites, in a site-agnostic way. It thus acts as a middleware between a workflow that a scientist wants to run and various compute facilities with different capabilities, hardware and software.

Although originally developed as a general purpose workflow execution system, it has recently been adopted for use in healthcare as part of the Enabling Personalized Interventions (EPI) project. To this end, the framework is being adapted and redesigned with a focus on working with sensitive datasets owned by different domains, and keeping the datasets in control of the data.

Brane is federated, which means that is has a centralised part that acts as an orchestrator that mediates work between decentralised parts that are hosted on participating domains. As such, they are called the orchestrator and a domain, respectively.

The reason that Brane is called a framework is that domains are free to implement their part of the framework as they wish, as long as they adhere to the overall framework interface. Moreover, different Brane instances (i.e., a particular orchestrator with a particular set of domains) can have different implementations, too, allowing it to be tailored to a particular use case's needs.

Book layout

We cover a number of different aspects of Brane's design in this book, separated into different groups of chapters.

First, in the development-chapters, we detail how to setup your laptop such that you can develop Brane. Part of this is compiling the most recent version of the framework.

In design requirements, we will discuss the design behind Brane and what motivated decisions made. Here, we will layout the parculiarities of Brane's use case and which assumptions where made. This will help to contextualise the more technical discussion later on.

The series on implementation details how the requirements are implemented by discussing notable implementation decisions on a high-level. For low-level implementation, refer to the auto-generated Brane Code Documentation.

In framework specification, we provide some lower-level details on the "specification" parts of the framework. In essence, this will give technical interfaces or APIs that allow components to communicate with each other, even if they have a custom implementation.

Finally, we discuss future work for Brane: ideas not yet implemented but which we believe are essential or otherwise very practical.

In addition to the main story, there is also the Appendix which discusses anything not part of the official story but worth noting. Most notably, the specification of the custom Domain-Specific Language (DSL) of Brane is given here, BraneScript.

Introduction

These chapters detail how to prepare your environment for developing Brane.

The compilation-chapter discusses which dependencies to install, and then what to do, to be able to compile Brane binaries and services.

Next

You can select the specific development chapter above or in the sidebar to the left.

Alternatively, you can switch topics altogether using the same sidebar.

Compiling binaries

This chapter explains how to compile the various Brane components.

First, start by installing the required dependencies.

Then, if you are coming from the scientist- or software engineer-chapters, you are most likely interested in compiling the brane CLI tool.

If you are coming from the administrator-chapters, you are most likely interested in compiling the branectl CLI tool and the various instance images.

People who aim to either run the Brane IDE or automatically compile BraneScript in another fashion, should checkout the section on libbrane_cli and branec.

Dependencies

If you want to compile the framework yourself, you have to install additional dependencies to do so.

Various parts of the framework are compiled differently. Most notably:

- Any binaries (

brane,branectl,branecorlibbrane_cli.so) are compiled using Rust'scargo; and - Any services (i.e., nodes) are compiled within a Docker environment.

Depending on which to install, you may need to install the dependencies for both of them.

Binaries - Rust and Cargo

To compile Rust code natively on your machine, you should install the language toolchain plus any other dependencies required for the project. An overview per supported OS can be found below:

Windows (brane, branec and libbrane_cli.so only)

- Install Rust and its tools using rustup (follow the instructions on the website).

- Install the Visual Studio Tools to install the required Windows compilers. Installing the Build Tools for Visual Studio should be sufficient.

- Install Perl (Strawberry Perl is fine) (follow the instructions on the website).

macOS

- Install Rust and its tools using rustup:

Or, if you want a version that installs the default setup and that does not ask for confirmation:# Same command as suggested by Rustup itself curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | shcurl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh -s -- --profile default -y - Install the Xcode Command-Line Tools, since that contains most of the compilers and other tools we need

# Follow the prompt after this to install it (might take a while) xcode-select --install

The main compilation script,

make.py, is tested for Python 3.7 and higher. If you have an older Python version, you may have to upgrade it first. We recommend using some virtualized environment such as pyenv to avoid breaking your OS or other projects.

Ubuntu / Debian

- Install Rust and its tools using rustup:

Or, if you want a version that installs the default setup and that does not ask for confirmation:# Same command as suggested by Rustup itself curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | shcurl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh -s -- --profile default -y - Install the packages required by Brane's dependencies with

apt:sudo apt-get update && sudo apt-get install -y \ gcc g++ \ cmake

Arch Linux

- Install Rust and its tools using rustup:

Or, if you want a version that installs the default setup and that does not ask for confirmation:# Same command as suggested by Rustup itself curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | shcurl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh -s -- --profile default -y - Install the packages from

pacmanrequired by Brane's dependenciessudo pacman -Syu \ gcc g++ \ cmake

Services - control, worker and proxy nodes

The services are build in Docker. As such, install it on your machine, and it should take care of the rest.

Simply follow the instructions on https://www.docker.com/get-started/ to get started.

Compilation

With the dependencies install, you can then compile the parts of the framework of your choosing.

For all of them, first clone the repository. Either download it from Github's interface (the green button in the top-right), or clone it using the command-line:

git clone https://github.com/epi-project/brane && cd brane

Then you can use the make.py script to install what you like:

- To build the

braneCLI tool, run:python3 make.py cli - To build the

branectlCLI tool, run:python3 make.py ctl - To build the

branecBraneScript compiler, run:python3 make.py cc - To build the

libbrane_cli.sodynamic library, run:python3 make.py libbrane_cli - To build the servives for a control node, run:

python3 make.py instance - To build the servives for a worker node, run:

python3 make.py worker-instance - To build the servives for a proxy node, run:

python3 make.py proxy-instance

Note that compiling any of these will result in quite large build caches (order of GB's). Be sure to have at least 10 GB available on your device before you start compiling to make sure your OS keeps functioning.

For any of the binaries (brane, branectl and branec), you may make them available in your PATH by copying them to some system location, e.g.,

sudo cp ./target/release/brane /usr/local/bin/brane

to make them available system-wide.

Troubleshooting

This section lists some common issues that occur when compiling Brane.

-

I'm running out of space on my machine. What to do?

Both the build caches of Cargo and Docker tend to accumulate a lot of artefacts, most of which aren't used anymore. As such, it can save a lot of space to clear them.For Cargo, simply remove the

target-directory:rm -rf ./targetFor Docker, you can clear the Buildx build cache:

docker buildx prune -af The above command clears all Buildx build cache, not just Brane's. Use with care.

The above command clears all Buildx build cache, not just Brane's. Use with care.It can also sometimes help to prune old images or containers if you're building new versions often.

Do note that running either will re-trigger a new, clean build, and therefore may take some time.

-

Errors like

ERROR: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?orunix:///var/run/docker.sock not foundwhen building services

This means that your Docker engine is most likely not running. On Windows and macOS, start it by starting the Docker Engine application. On Linux, run:sudo systemctl start docker -

Permission deniederrors when building services (Linux)

Running Docker containers may be a security risk because it can be used to edit any file on a system, permission or not. As such, you have to be added to thedocker-group to run it without usingsudo.If you are managing the machine you are running on, you can run:

sudo usermod -aG docker "$USER"Don't forget to restart your terminal to apply the change, and then try again.

Design requirements

In this series of chapters, we present the main context for Brane: its rationale, the setting and use-case for which it is developed and, finally, the design restrictions and requirements for designing it.

First, in the background chapter, the context and rationale of Brane is explained. Then, we discuss the setting and use-case of Brane, which then allows us to state a set of restrictions and requirements for its design.

If you are eager to read to how Brane actually works, you can also skip ahead to the design series of chapters.

Background

This chapter will be written soon.

Context & Use-case

This chapter will be written soon.

Requirements

In the first- and second chapters in this series, we defined the context and use cases for which Brane was designed. With this in mind, we can now enumerate a set of requirements and assumptions that Brane has to satisfy.

The assumptions defined in this chapter may be referred to later by their number; for example, you may see

This is because ... (Assumption A2)or

This is a consequence of ... (Requirement B1)used in other chapters in this book to refer to assumptions and requirements defined here, respectively.

Usage in High-Performance Computing (HPC)

Brane's original intended purpose was to homogenise access to various compute clusters and pool their resources. Moreover, the original project focused on acknowledging the wide range of knowledge required to do HPC and streamline this process for all parties involved. As such, it is a framework that is intended to do heavy-duty work on large datasets with a clean separation of concerns.

First, we specify more concretely what kind of work Brane is intended to perform:

Assumption A1

HPC nowadays mostly deals with data processing pipelines, and as such Brane must be able to process these kind of pipelines (described by workflows).

However, as the data pipelines increase in complexity and required capacity, it becomes more and more complex to design and execute the workflows representing these pipelines. It is assumed that this work can be divided into the following concerns:

Assumption A2

To design and execute a data pipeline, expertise is needed from a (system) administrator, to manage the compute resources; a (software) engineer, to implement the required algorithms; and a (domain) scientist, to design the high-level workflow and analyse the results.

Tied to this, we state the following requirement on Brane:

Requirement A1

Brane must be easy-to-use and familiar to each of the roles representing the various concerns.

This leads to the natural follow-up requirement:

Requirement A2

To fascilitate a clear separation of concerns, the roles must be able to focus on their own aspects of the workflow; Brane has the burden to bring everything together.

Requirement A2 reveals the main objective of the Brane framework as HPC middleware: provide intuitive interfaces for all the various roles (the frontend) and combine their efforts into the execution of a workflow on various compute clusters (the backend).

Further, due to the nature of HPC, we can require that:

Requirement A3

Brane must be able to handle high-capacity workflows that require large amounts of compute power and/or process large amounts of data.

Finally, we can realise that:

Assumption A3

HPC covers a wide variety of topics and therefore use-cases.

Thus, to make Brane general, it must be very flexible:

Requirement A4

Brane must be flexible: it must support many different, possibly use-case dependent frontends and as many different backends as possible.

To realise the last requirement, we design Brane as a loosely-coupled framework, where we can define frontends and backends in isolation as long as they adhere to the common specification.

Usage in healthcare

In addition to the general HPC requirements presented in the previous section, Brane is adapted for usage in the medical domain (see background). This introduces new assumptions and requirements that influence the main design of Brane, which we discuss in this section.

Most importantly, the shift to the medical domain introduces a heterogeneity in data access. Specifically, we assume that:

Assumption B1

Organisations often maximise control over their data and minimize their peers' access to their data.

This is motivated by the often highly-sensitive nature of medical data, combined with the responsibilities of the organisations owning that data to keep it private. Thus, the organisations will want to stay in control and are typically conservative in sharing it with other domains.

From this, we can formulate the following requirement for Brane:

Requirement B1

Domains must be as autonomous as possible; concretely, they cannot be forced by the orchestrator or other domains (not) to act.

To this end, the role of the Brane orchestrator is weakened from instructing what domains do to instead requesting what domains do. Put differently, the orchestrator now merely acts as an intermediary trying to get everyone to work together and share data; but the final decision should remain with the domains themselves.

In addition to maximising autonomy, recent laws such as GDPR [1] open the possibility that rules governing data (e.g., institutional policies) may be privacy-sensitive themselves. For example, Article 17.2 of the GDPR states that "personal data shall, with the exception of storage, only be processed with the data subject's consent". However, if this consent is given for data with a public yet sensitive topic (e.g., data about the treatment of a disease), then it can easily be deduced that the data subject's consent means that the patient suffers from that disease.

This leads to the following assumption:

Assumption B2

Rules governing data access may be privacy-sensitive, or may require privacy-sensitive information to be resolved.

This prompts the following design requirement:

Requirement B2

The decision process to give another domain access to a particular domain's data must be kept as private as possible.

Note, however, that by nature of these rules it is impossible to keep them fully private. After all, there is exist attacks to reconstruct the state of a state machine by observing its behaviour [2]; which means that enforcing the decision process necessarily offers opportunity for any attacker to discover them. However, Brane can give opportunity for domains to hide their access control rules by implementing an indirection between the internal behaviour of the decision process and an outward interface (see [3] for more information).

Because it becomes important to specify the rules governing data access properly and correctly, we can extend Assumption A2 to include a fourth role (the extension is emphasised):

Assumption B3

To design and execute a data pipeline, expertise is needed from a (system) administrator, to manage the compute resources; a policy expert, to design and implement the data access restrictions; a (software) engineer, to implement the required algorithms; and a (domain) scientist, to design the high-level workflow and analyse the results.

Finally, for practical purposes, though, the following two assumptions are made that allow us a bit more freedom in the implementation:

Assumption B4

A domain always adheres to its own data access rules.

(i.e., domains will act rationally and to their own intentions); and

Assumption B5

Whether data is present on a domain, as well as data metadata, is non-sensitive information.

(i.e., the fact that someone has a particular dataset is non-sensitive; we only consider the access control rules to that data and its contents as potentially sensitive information).

Next

In this chapter, we presented the assumptions and requirements that motivate and explain Brane. This builds upon the general context and use-cases of Brane, and serves as a background for understanding the framework.

Next, you can start examining the design in the Brane design chapter series. Alternatively, you can also skip ahead to the Framework specifiction if you are more implementation-oriented.

Alternatively, you can also visit the Appendix to learn more about the tools surrounding Brane.

References

[1] European Commission, Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation) (Text with EEA relevance) (2016).

[2] F. W. Vaandrager, B. Garhewal, J. Rot, T. Wiẞmann, A new approach for active automata learning based on apartness, in: D. Fisman, G. Rosu (Eds.), Tools and Algorithms for the Construction and Analysis of Systems - 28th International Conference, TACAS 2022, Held as Part of the European Joint Conferences on Theory and Practice of Software, ETAPS 2022, Munich, Germany, April 2-7, 2022, Proceedings, Part I, Vol. 13243 of Lecture Notes in Computer Science, Springer, 2022, pp. 223–243. doi:10.1007/978-3-030-99524-9_12. URL http://dx.doi.org/10.1007/978-3-030-99524-9_12

[3] C. A. Esterhuyse, T. Müller, L. T. van Binsbergen and A. S. Z. Belloum, Exploring the enforcement of private, dynamic policies on medical workflow execution, in: 18th IEEE International Conference on e-Science, e-Science 2022, Salt Lake City, UT, USA, October 11-14, 2022, IEEE, 2022, pp. 481-486

Introduction

Bird's-eye view

In this chapter, we provide a bird's-eye view of the framework. We introduce its centralised part (the orchestrator), decentralised part (a domain) and the components that each of them make up.

The components are introduced in more detail in their respective chapters (see the sidebar to the left). Here, we just globally specify what they do to understand the overarching picture.

Finally, note that the discussion in this chapter is on the current implementation, as given in the framework repository. Future work is discussed in its own series of chapters, which is a recommended read for anyone trying to do things like bringing Brane in production.

The task at hand

As described in the Design requirements, Brane is primarily designed for processing data pipelines in an HPC setting. In Brane, these data pipelines are expressed as workflows, which can be thought of as high-level descriptions of a program or process where individual tasks are composed into a certain control flow, linking inputs and outputs together. Specific details, such as where a task is executed or exploiting parallelism, is then (optionally) left to the runtime (Brane, in this case) to deduce automatically.

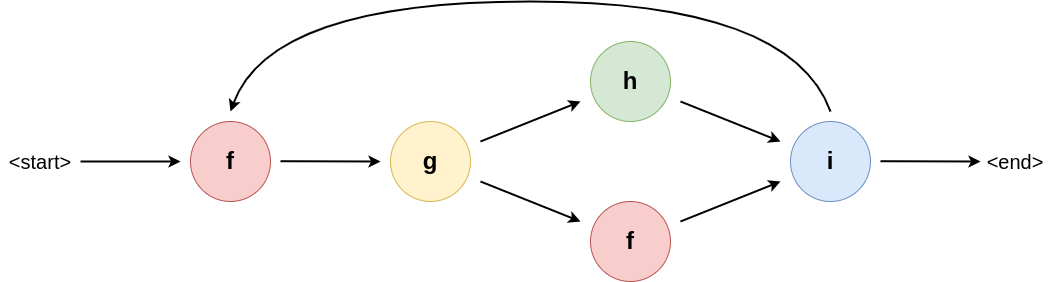

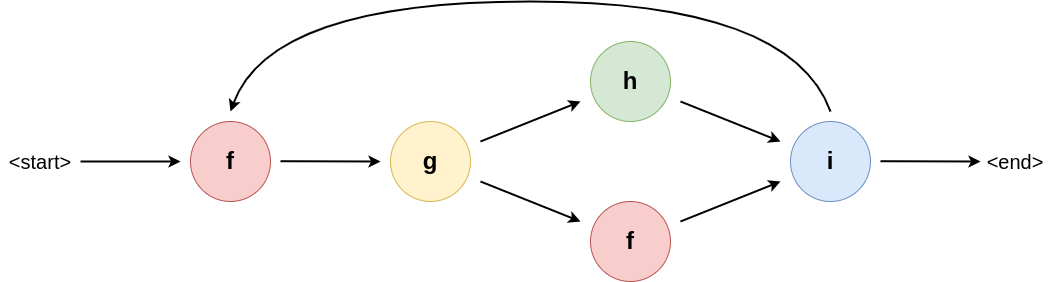

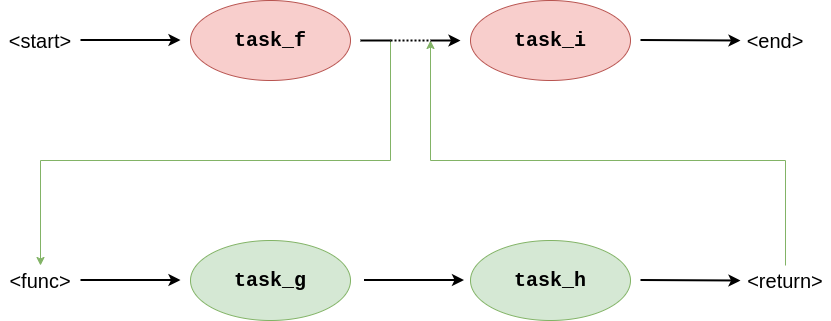

A workflow is typically represented as a graph, where nodes indicate tasks and edges indicate some kind of dependency. For Brane, this dependency is data dependencies. An example of such a graph can be found in Figure 1.

Figure 1: An example workflow graph. The node indicate a particular function, where the arrows indicate how data flows through them (i.e., it specifies data dependencies). Some nodes (i in this example), may influence the control flow dynamically to introduce branches or loops.

At runtime, Brane will first plan the workflow to resolve missing runtime information (e.g., which task is executed where). Then, it starts to traverse the workflow, executing each task as it encounters them until the end of the workflow is reached.

The tasks, in turn, can be defined separately as some kind of executable unit. For example, these may be executable files or containers that can be send to a domain and executed on their compute infrastructure. This split in being able to define workflows separately from tasks aligns well with Brane's objective of separating the concerns (Assumption B3) between the scientists and software engineers.

A federated framework

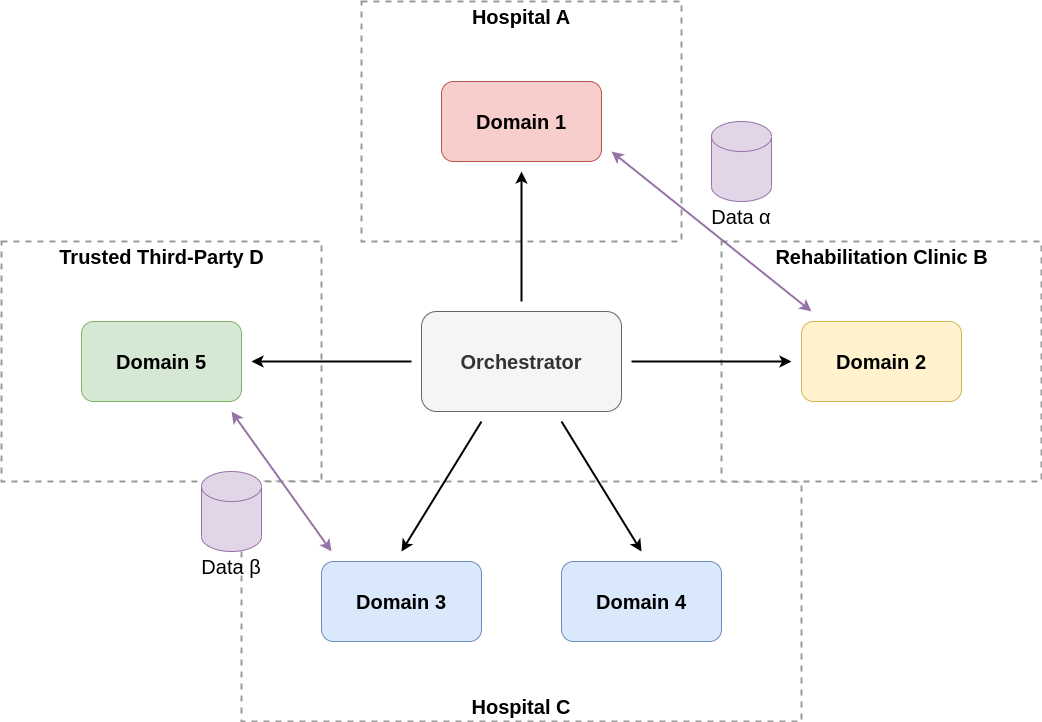

Figure 2: An overview of the framework services and how information flows between them. The Brane orchestrator communicates with domains to have them execute jobs or transfer data. Every domain can be thought of as one organisation, but not necessarily distinct (e.g., Hospital C has multiple domains).

Brane is a federated framework, with a centralised orchestrator that manages the work that decentralised domains perform (see Figure 2). This orchestrator does so by sending control messages to various domains, which encodes requests to perform a task on a certain data or exchange certain data with other domains. Importantly, these control messages only contain data metadata, which is assumed to be non-sensitive (Assumption B5); the actually sensitive contents of the data is only exchanged between domains when the domains decide to do so.

In this design, in accordance with Requirement B1, Brane does not attempt to control the domains themselves. Instead, it defines the orchestrator and what kind of behaviour the domain should display if it's well-behaved. However, because of the autonomy of the domains, no guarantees can be made about whether the domains are actually well-behaved; and as such, Brane is designed to allow well-behaving domains to deal with misbehaving domains.

The components of Brane

The Brane framework is defined in terms of components, each of which has separate responsibilities in achieving the functionality of the framework as a whole. They could be thought of as services, but components are more high-level; in practise, a component can be implemented by many services, or a single service may implement multiple components.

Because Brane is federated, the components are grouped similarly. There are central components, which live in the orchestrator and work on processing workflows and emitting (compute) events; and there are local components that live in each domain and work on processing the emitted events.

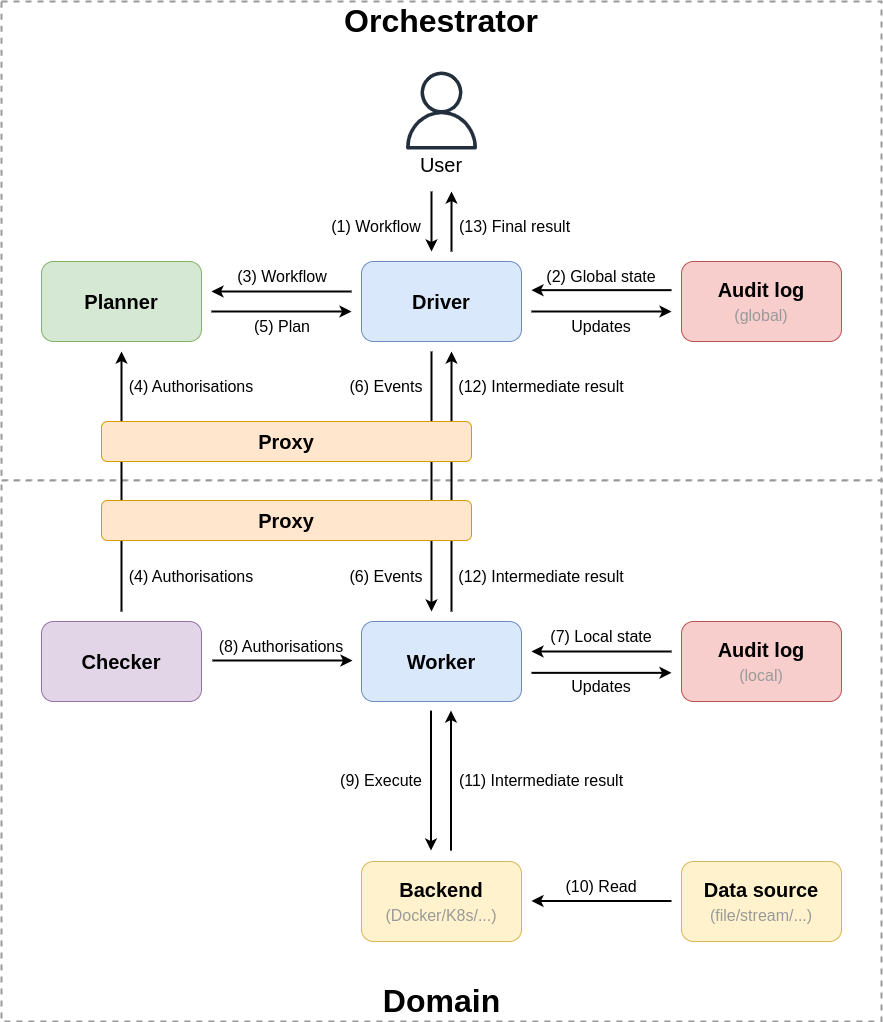

An overview of the components can be found in Figure 3.

Figure 3: An overview of Brane's components, separated in the central plane (the orchestrator) and the local plane (the domains). Each node represents a component, and each arrow some high-level interaction between them, where the direction indicates the main information flow. The annotation of the arrows give a hint as to what is exchanged and in what order. Finally, the colours of the nodes indicate a flexible notion of similarity.

Central components

The following components are defined at the central orchestrator:

- The driver acts as both the entrypoint to the framework from a user's perspective, and as the main engine driving the framework operations. Specifically, it takes a workflow and traverses it to emit a series of events that need to be executed by, or otherwise processed on, the domains.

- The planner takes a workflow submitted by the user and attempts to resolve any missing information needed for execution. Most notably, this includes assigning tasks to domains that should execute them and input to domains that should provide them. However, this can also include translating internal representations or adding additional security. Finally, the planner is also the first step where policies are consulted.

- The global audit log keeps track of the global state of the system, and can be inspected both by global components and local components (the latter is not depicted in the figure for brevity).

- The proxy acts as a gateway for traffic entering or leaving the node. It is more clever than a simple network proxy, though, as it may also enforce security, such as client authentication, and acts as a hook for sending traffic through Bridging Function Chains.

Local components

Next, we introduce the components found on every Brane domain. Two types of components exist: Brane components and third-party components.

First, we list the Brane components:

- The worker acts as a local counterpart to the global driver. It takes events emitted by the driver and attempts to execute them locally. An important function of the worker is to consult various checkers to ensure that what it does it in line with domain restrictions on data usage.

- The checker is the decision point where a domain can express restrictions on what happens to data they own. At the least, because of Assumption B4, the checker enforces its own worker's behaviour; but if other domains are well-behaved, the checker gets to enforce their workers too. As such, the checker must also reason about which domains it expects to act in a well-behaved manner and thus allow them to access its data.

- The local audit log acts as the local counterpart of the global audit log. As the global log keeps track of the global state, the local log keeps track of local state; and together, they can provide a complete overview of the entire system.

- The proxy acts as a gateway for traffic entering or leaving the node. It is more clever than a simple network proxy, though, as it may also enforce security, such as client authentication, and acts as a hook for sending traffic through Bridging Function Chains.

The third-party components:

- The backend is the compute infrastructure that can actually execute tasks and process datasets. Conceptually, every domain only has one, although in practise it depends on the implementation of the worker. However, they are all grouped as one location as far as the planner is concerned.

- The data sources are machines or other sources that provide the actual data on which the tasks are executed. Their access is mostly governed by checkers, and they can supply data in many forms. From a conceptual point of view, every data source is a dataset, which can be given as input to a task - or gets created when a task completes to represent an output.

Implementing components

In the reference implementation, the components discussed in the previous section are implemented by actual services. These really are concrete programs that may run on a set of hosts to implement the functionality of the Brane framework.

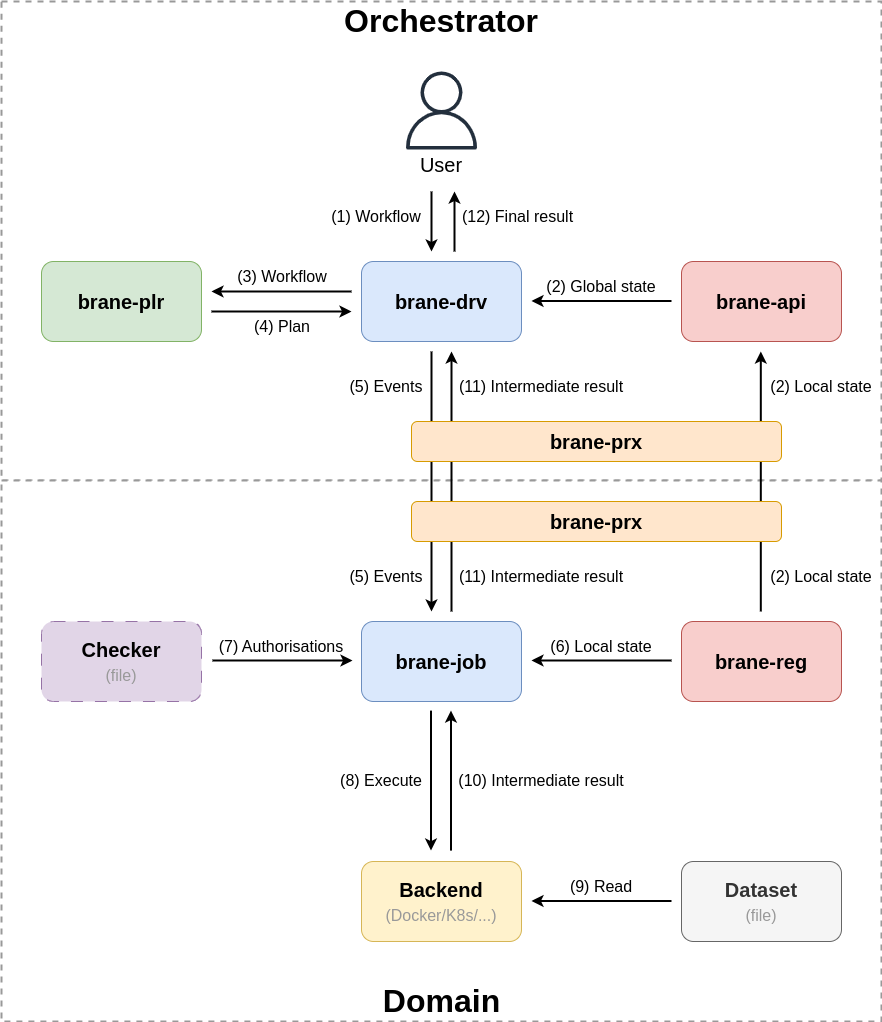

Figure 4: An overview of Brane's services as currently implemented. Every node now represents a service, at the identical location as the component they implement but with the name used in the repository. Otherwise, the figure can be read the same way as Figure 3.

Figure 4 shows a similar overview as Figure 3, but then for the concrete implementation instead of the abstract components. Important differences are:

- Checkers are purely virtual, and implemented as a static file describing the policies.

- Planners do not consult the domain checker files, but instead create plans without knowledge of the policies. Instead, the planner relies on workers refusing to execute unauthorised plans.

- The audit logs are simplified as registries, of which the global one (

brane-api) mirrors the local ones (brane-reg) for most information. - Data is only available as files or directories, not as generic data sources.

Next

The remainder of this series of chapters will mostly discuss the functionality of the reference implementation. To start with, head over to the description of the individual services, or skip ahead to which protocols they implement.

After this, the specification defines how the various components can talk to each other in terms such that new services implementing the components may be created. Then, in the Future work series of chapters, we discuss how the reference implementation can be improved upon to reflect the component more accurately and practically.

Aside from that, you can also learn more about the context of Brane or its custom Domain-Specific Language, BraneScript.

Virtual Machine (VM) for the WIR

An important part of the the Brane Framework is the execution engine for the Workflow Intermediate Representation (WIR). Because the WIR is implemented as a binary-like set of edges and instructions, this execution engine is known as a Virtual Machine (VM) because it emulates a full set of resources needed to execute the WIR.

In these chapters, we will discuss the reference implementation of the VM. Note that it is assumed that you are already familiar with the WIR and have read the matching chapters in this book.

VM with plugins

In Brane, a workflow might be executed in different contexts, which changes what effects a workflow has.

In particular, if we think of executing a workflow as translating it to a series of events that must be processed in-order, then how we process these events differs on the execution environment. In brane-cli, this environment is on a single machine and for testing purposes only; whereas in brane-drv, the environment is a full Brane instance with multiple domains, different backends and policies to take into account.

To support this variety of use-cases, the VM has a notion of plugins that handle the resulting events. In particular, the traversal and processing of a workflow is universalised in the base implementation of the VM. Then, the processing of any "outward-facing" events (e.g., task processing, file transfer, feedback to user) is delegated to configurable pieces of code that handle these different based on the use-case.

Source code

In the reference implementation, the base part of the VM is implemented in the brane-exe-crate. Then, brane-cli implements plugins for a local, offline execution, whereas brane-drv implements the plugins for the in-instance, online execution. Finally, a set of dummy plugins for testing purposes exists in brane-exe as well.

Next

In the next chapter, we start by considering the main structure and overview of the VM, including how the VM handles threads and plugins. Then, we examine various implementations of subsystems: the expression stack (including the VM's notion of values), the variable register and the frame stack (including virtual symbol tables).

If you're interesting in another topic than the VM, you can select it in the sidebar to the left.

Overview

In this chapter, we discuss the main operations of the Brane VM.

Note that we mostly focus on the global operations, and not on how specific Edges or EdgeInstructions of the WIR are executed. Those are implemented as discussed in the WIR specification (mostly).

Running threads

In its core, the VM is build as a request processing system, since that is the main functionality of the brane-drv: workflows come in as disjoint requests that can be processed separately and in parallel.

As such, the VM has to balance multiple threads of execution. Each of these represent a single workflow or, more low-level, a single graph of edges that the VM has to traverse. However, these threads are completely isolated from each other; it is assumed that they do not interfere with each other during execution. This is made possible by considering datasets to be immutable1.

Practically, the threads of the VM are executed as Rust Futures (roughly analogous to JavaScript Promises). This means that no explicit management of threads is found back in the codebase, or indeed in the rest of the description of the VM (parallel-statements excempted). Instead, they are handled implicitly by virtue of multiple requests coming in in parallel.

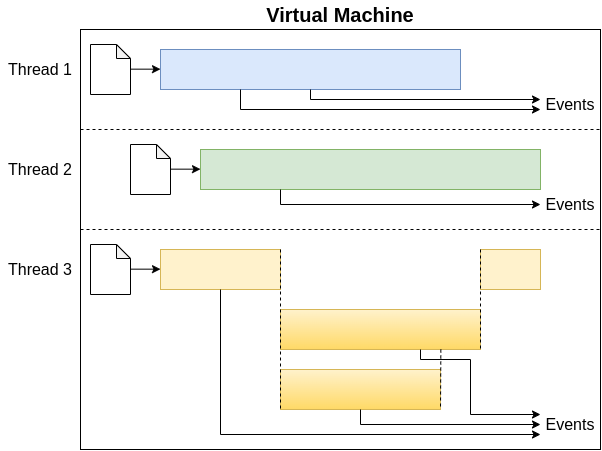

Every thread, then, acts as a single-minded VM executing only a single workflow. This representation is visualized in Figure 1.

Figure 1: Visualisation of the Virtual Machine and its threads. The VM executes multiple threads in parallel, each originating from its own workflow. Threads themselves then unspool a workflow into a series of events, such as giving feedback to the user or executing tasks. Sometimes, threads may also be spawned as the result of a fork (e.g., parallel statements; thread 3).

Practically, however, datasets aren't completely immutable because their identifier can be re-bound to a new version of it. That way, threads can interfere with each other by re-assigning the identifier as another thread wants to use it. This is not fixed in the reference implementation.

Unspooling workflows

Threads process workflows as graphs. Given one (or given a sub-part of the workflow, as in the case of fork), they traverse the graph as dictated by its structure. Because workflows can be derived from scripting-like languages, the traversing can include dynamic control flow decisions. To this end, a stack is used to represent the dynamic state, as well as a variable register, to emulate variables, and a frame stack, to support an equivalent of function calls.

As the workflow gets traversed, threads encounter certain nodes that require emitting certain events; this are first emitted internally to be caught by the chosen plugin. Then, the plugin re-emits them for correct processing: for example, this can be emitted as a print-statement for the user or as a task execution on a certain domain.

How the workflow is traversed exactly is described in the WIR specification.

VM plugins

As mentioned in the previous chapter, does the VM rely on plugins to change how it emits events that occur as the workflow is processed. These plugins change, for example, how a task is scheduled; in the local case, it is simply pushed to the local container daemon, whereas in the global case, it is sent as a request to the domain in question.

The plugins are implemented through a Rust trait (i.e., an interface). Then, which plugin is used can be changed statically by assigning a different type to the VM when it is instantiated; the compiler will then take care to generate a version of the VM that uses the given plugin. Because this is done statically, it is very performant but not very flexible (i.e., plugins cannot be changed at runtime, only at compile time).

Next

With this overview in mind, we will next examine the expression stack, before continuing with the variable register and frame stack.

You can also select another topic in the sidebar on the left.

Expression stack

In this chapter, we will discuss the reference implementation's implementation of the expression stack for the Virtual Machine (VM) globally introduced in the previous chapter. Because it is a thread that will execute a single workflow, we will discuss the stack's behaviour in the context of a thread.

First, we will discuss the basic view of the stack, after which we discuss the stack's notion of values (including dynamic popping).

Basic view

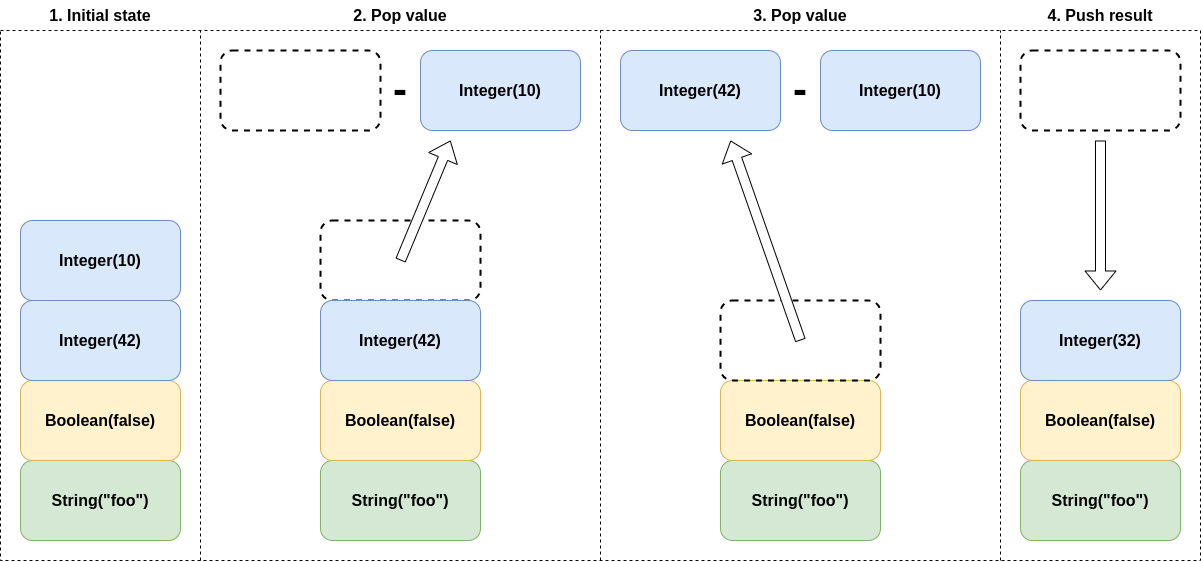

The stack acts as a scratchpad memory for the thread to which it belongs. As the thread processes graph edges and their attached instructions, these may push values on the stack of pop values from them. This is done in FIFO-order, i.e., pushing adds new values on top of the stack, whereas popping removes them in reverse order (newest first). Typically, instructions consume values, perform some operation on them and then push them back. This is visualised in Figure 1.

Figure 1: Visualisation of an arithmetic operation (subtraction) on the stack. Operations are defined as rewrite rules on the top values of the stack, popping input as necessary and pushing results as computed. In this example, note that possibly counter-intuitive ordering of arguments due to the FIFO nature of the stack.

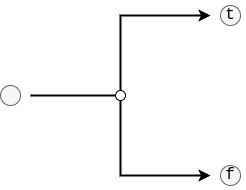

Some edges, then, may introduce conditionality by taking one of multiple next possible branches in the tree. Which branch is taken then depends on the top value of the stack, which typically uses a boolean true to denote that the branch should be taken or a boolean false to denote it shouldn't.

Note that the stack in the implementation is relatively simple. It is bounded to a static size (allowing Stack overflow errors to potentially occur), and it does not store large objects, such as strings, arrays and instances, by reference but instead by value. This means that, everytime an operation is performed on such an object, the object needs to be re-created or copied instead of simply pushing a handle to it.

Value's and FullValue's

The things stored on the stack are valled values, and represent one particular value that is used or mutated in expressions and/or stored in variables (see the next chapter). Specifically, values in the stack are typed, and may be of any data type defined in the WIR except any grouping of types, including DataType::Any. This latter implies that all types will be resolved at runtime, even if they aren't always known beforehand in the given workflow.

Note, however, that the values on the stack do not embed their type information completely. Customized information, such as a class definition or function header, lives on the frame stack and is scoped to the function that we're calling. As such, values only contain identifiers to the specific definition currently in scope.

There exists a variant of value's, full values, which do embed their definitions. These are used when values are communicated outside of the VM, such as return values of workflows.

Dynamic popping

A special case of value on the stack is a dynamic pop marker, which is used in dynamic popping. This is used when the return value of a function cannot be typed at runtime, and an unknown number of items have to be popped from the stack after the function returns.

To this end, the pop marker is used. First, it is pushed to the stack before the function call; then, when executing a dynamic pop, the stack will simply pop values until it has popped the dynamic pop marker. For all other operations, the pop marker is invisible and acts as though the value below it is on top of the stack instead.

Next

In the next chapter, we discuss the next component of the VM, the variable register. The frame stack will be discussed after that.

If you like have an overview of what the VM does as a whole, check the previous chapter. You can also select a different topic altogether in the sidebar to the left.

Variable register

![]()

VariableRegister in brane-exe/varreg.rs./

In the previous chapter, we introduced the expression stack as a scratchpad memory for computing expressions as a thread of the VM executes a workflow. However, this isn't always suitable for all purposes. Most notably, the stack only allows operations on the top values; this means that if we want to re-use arbitrary values from before, this cannot be represented by the stack alone.

To this end, a variable register is implemented in the VM that can be used to temporarily store values on another location outside of the stack altogether. Then, the values in these variables can be pushed to the stack whenever they're required.

Implementation

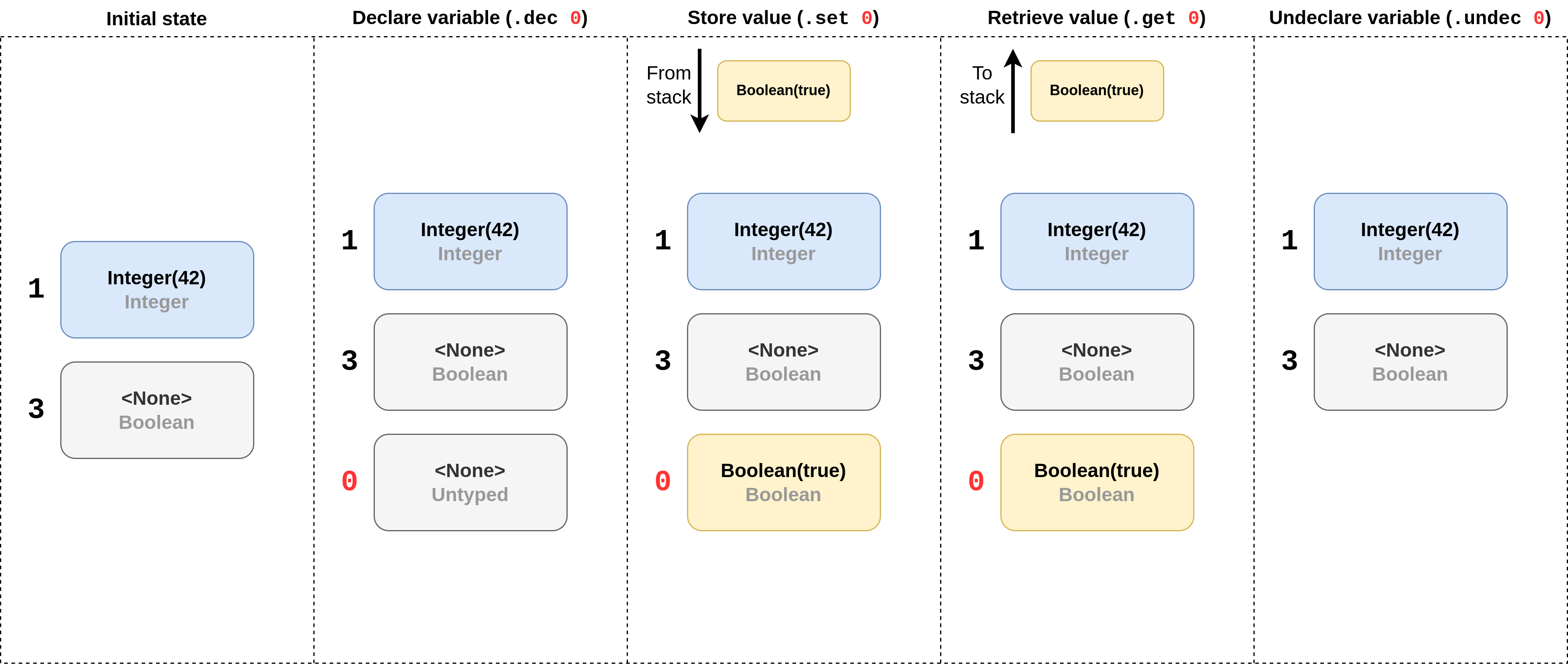

The variable register is implemented as an unordered map of variable identifiers to values. Like values, the variable register does not store variables by name but rather by definition, as found in the workflow's definition table. As such, the map is keyed by numbers indexing the table instead of its name. The definition in the definition table, then, defines the variable's name and type.

The variables in the register can have one of three states:

- Undeclared: the variable does not appear in the register at all;

- Declared but uninitialized: the variable appears in the register but have a null-value (Rust's

None-value); or - Initialized: the variable appears in the register and has an actualy value.

The reason for this is that variables are typed, and that they cannot change type. In most cases, variables will have their types annotated in their definition; but sometimes, if this cannot be determined statically, then a variable will assume the type of the first value its given until it is undeclared and re-declared again.

Figure 1: Visualisation of the possible variable register operations. Variables are stored by ID, and can either have a value or <None> to represent uninitialized. When a new variable is declared, the ID is looked up in the workflow definition table, and the slot is typed according to the variable's static type. Otherwise, (as is the case for 0), the type is set when the variable is initialized. Further operations must always use that type until the variable is undeclared and re-declared.

Next

The next chapter will see the discussion of the frame stack, the final of the VM's state-components. In the chapter after it, we will showcase how the components operate together when executing a simple workflow.

For an overview of the VM as a whole, check the first chapter in this series. You can also select a different topic altogether in the sidebar to the left.

Frame stack

![]()

FrameStack in brane-exe/frame_stack.rs.

In the previous chapters, the expression stack and the variable register were introduced. In this chapter, we will introduce the final state component of the VM: the frame stack. After this, we have enough knowledge to examine a full VM execution in the final chapter of this series.

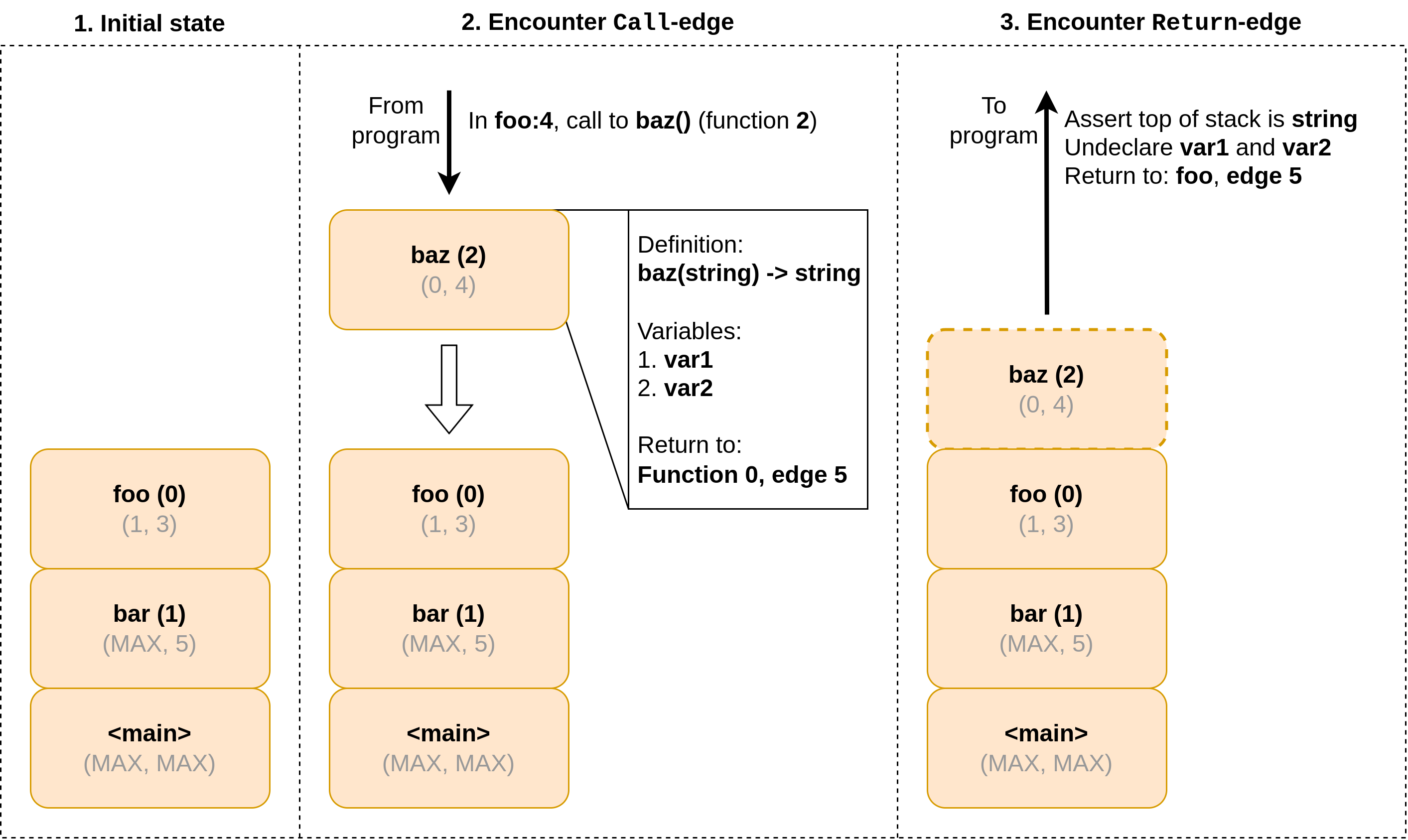

The frame stack is a FIFO-queue, like the expression stack. However, where the expression stack acts as a scratchpad memory for computations, the frame stack's utility lies with function calls, and keeps track of which calls have been made and -more importantly- where to return to when a Return-edge is encountered. Finally, the frame stack also keeps track of the function return type (if known) for an extra type check upon returning from a function.

Implementation

The frame stack is implemented as a queue of frames, which each contain information about the context of a function call. In particular, frames store three things:

- A reference to the function definition (in the workflow's definition table) of the called function;

- A list of variables (as references to variable definitions in the definition table) that are scoped to this function. When the function returns, these variables will be undeclared; and

- A pointer to the edge to execute after the function in question returns. Note that this points to the next edge to execute, i.e., the edge after the call itself.

Other than that, the frame stack pretty much acts as a stack for calls. A visualisation of this process is given in Figure 1.

Figure 1: Visualisation of the frame stack operations. Elements on the stack (frames) are pushed for every call and popped for every return. Frames describe the behaviour of return in terms of return type, variable undeclaration and which address to return to. Note the address returns to the edge after the call, not the call itself.

Typing scopes

In Brane versions 3.0.0 and earlier, the frame stack had the secondary goal of maintaining which type definitions (functions, tasks, classes and variables) were currently in scope. In particular, WIR-workflows were written with a per-function definition table, which added definitions local to that function only to the current scope.

In that context, the frame stack frames included the function's definition table, along with mechanisms for resolving the stack of tables into a coherent scope. As such, in the reference implementation's code, the frame stack also provides access to all definitions of the workflow to the rest of the VM.

However, this proved to be extremely complicated in practise, and is phased out in versions later than 3.0.0. Now, there is just a single table at the start of the workflow that contains all definitions occurring. For compatability reasons, the frame stack still contains a pointer to that table and provides access to it in the codebase.

Next

In the next chapter, all three separate VM components (expression stack, variable register and frame stack) are used to showcase an example execution for the VM. That will conclude this series of chapters.

Alternatively, you can also select another topic in the sidebar to the left.

Bringing it together

In the previous few chapters, various components of the VM have been discussed that together make up the VM's state. To showcase how this state is used, this section emulates the run of a VM to see how a particular program is processed and changes the state.

The workflow that we will execute can be examined here. A recommended way to read this chapter is to familiarize yourself with the workflow first (in any of the three representations), and then read the example below. You can open the workflow in a separate tab to refer to it, if you wish.

Example

The execution of the workflow is described in three parts: first, we will examine the initial state of the VM; then, we emulate one of the function calls in the workflow; after which we do the remainder of the workflow in more high-level and examine the result.

Initial state

The state of the VM is expressed in four parts:

- The program counter, which points to the next edge/instruction to execute;

- The expression stack;

- The variable register; and

- The frame stack.

Throughout the example, we will display the state of these components as they get updated. For example, this is the VM's state at start:

Program counter

// We give the program counter as a triplet of (<func>, <edge>, <instr_or_null>) // Remember that `MAX` typically refers to `<main>` // Points to the first instruction in the first Linear edge in `<main>` (MAX, 0, 0)Expression stack

// The expression stack is given as a list of values (empty for now) [ ]Variable register

// The variable register is given as a map of variable names to the contents // for that variable (empty for now) { }Frame stack

// The frame stack is given as a list of frames [ // Every frame is: function, variables (as name, type), return address { // Remember that `MAX` typically refers to `<main>` "func": MAX, // Lists all variables declared in this function's body "vars": [ ("zeroes", "IntermediateResult"), ("ones", "IntermediateResult"), ], // See 'Program counter', or null for when there is no return address "pc": null } ]

Most state is empty, except for the program counter, which points to the first edge in the first function; and the frame stack, which contains the root call made by the VM to initialize everything.

Function call

If we examine the workflow, the first edge we encounter is a Linear edge. This contains multiple instructions, and so the VM will execute these first.

Note that every step has an implicit update to the program counter at the end. For example, step 1.1 ends with:

Program counter

(MAX, 0, 1) // Note the bump in the instruction!

and step 1 as a whole ends with:

Program counter

(MAX, 1, 0) // Note the bump in the edge!

We will only note it when changes to typical bumping happen (e.g., function call).

-

Linear-edge: To process a linear edge, the instructions within are executed.-

.dec zeroes: The first instruction is to declare thezeroes-variable, as the start of the function (in this case,<main>). As such, this creates an entry for it in the variable register:Variable register

{ // The entry for the `zeroes`-variable. 4: { "name": "zeroes", "type": "IntermediateResult", "val": null } }(Refer to the workflow table in Listing 2 to map IDs to variables)

The variable is declared (part of the register) but not initialized (

valisnull). -

.int 3: Next, we begin by computing the argument to the first function calls, which is3 + 3. As such, the next instruction pushes a3integer constant to the expression stack. The stack looks as follows after executing it:Expression stack

[ { // The data type of this entry on the stack. "dtype": "int", // The integer value stored. "value": 3, } ] -

.int 3: Another integer constant is pushed (3again). The stack looks as follows after executing it:Expression stack

[ // The new entry (so we reversed the order of the list) { "dtype": "int", "value": 3, }, { "dtype": "int", "value": 3, } ] -

.add: We perform the addition to complete the expression3 + 3in the workflow. This pops the top two values, adds them, and pushes the result to the stack. Therefore, this is the expression stack after the addition has been performed:Expression stack

[ // We've just done `3 + 3` :) { "dtype": "int", "value": 6, } ] -

.func generate_dataset: With the arguments to the function call prepared on the stack, the next step is to push what we will be calling. As such, the top of the stack will be a pointer to the function that will be executed.Expression stack

[ { "dtype": "function", // Note that the function is represented by its identifier "def": 4, }, { "dtype": "int", "value": 6, } ]Now, we are ready for a function call. However, since this control flow deviation must be visible to checkers who want to validate the workflow, this is represented at the Edge-level. The next thing to execute is thus a next edge.

-

-

Call-edge: The Call will first pop the function pointer from the stack and resolves it to a function definition in the workflow's definition table. In this case, it will check function4and see that it'sgenerate_dataset. Based on its signature, it knows that the top of the stack must contains a value ofAnytype; and as such, it will assert this is the case. This means that the expression stack looks like:Expression stack

[ // Only the pointer is popped; the arguments are for the function to figure out { "dtype": "int", "value": 6, } ]Next, a new frame is pushed to the frame stack that allows us to handle any

Return-edges appropriately:Frame stack

[ { // We're calling function 4 (generate_dataset)... "func": 4, // ...which will declare the variables `n` "vars": [ ("n", "Any"), ], // ...and return to the next Edge after the call (the second Linear in `<main>`) "pc": (MAX, 2, 0) }, { "func": MAX, "vars": [ ("zeroes", "IntermediateResult"), ("ones", "IntermediateResult"), ], "pc": null } ]Once the expression stack and frame stack check out, the call is performed; this is done by changing the program counter to point to the called function:

Program counter

(4, 0, 0) // First Edge, first instruction in function 4 (generate_dataset)This means that the next edge executed is no longer the next one in the main function body, but rather the first one in the called function's body.

Function body

Next, the function body of the generate_dataset function (i.e., function 4) is executed by the VM.

-

Linear-edge:-

.dec n: The first step when the function body is entered is to map the arguments on the stack to named variables. To do that, the variable has to be declared first; and that's what happens here.

The variable register after declaration:Variable register

{ // The entry for the `n`-variable. 0: { "name": "n", "type": "Any", // Still uninitialized "val": null }, 4: { "name": "zeroes", "type": "IntermediateResult", "val": null } } -

.set n: With the variable declared, we can pop the top value off the expression stack and move it to the variable's slot in the variable register.

The expression stack is empty after performing the.set:Expression stack

[ ]and instead, the value is now assigned to

n:Variable register

{ // The entry for the `n`-variable. 0: { "name": "n", // Because it was `Any`, the type of `n` has been set in stone now "type": "int", "val": 6 }, 4: { "name": "zeroes", "type": "IntermediateResult", "val": null } } -

.get n: After storing the arguments, the function call needs to be prepared now (which is a call tozeroes, using a number and a string). A disadvantage of the push/pop model is that, even if the value was just on top of the stack, we removed it to move it to the variable register. Therefore, we need to add it again to use in the next function call:Expression stack

[ { "dtype": "int", "value": 6, } ]Note that the variable register is actually not edited, as

.gets perform copies. -

.cast Integer: Before moving to the next argument of the call, a cast is used by the compiler to have the VM assert the current value on the stack is convertible to the required integer. In addition, if the user uses a type trivially convertible to integers (e.g., booleans), then this cast steps is where this conversion happens.

However, as the value was already an integer, nothing changes and the VM continues. -

.str "vector": For the second argument to the function call, a string constant is used. This is pushed with this instruction.Expression stack

[ { "dtype": "string", "value": "vector", }, { "dtype": "int", "value": 6, } ]

-

-

Node-edge: Now it's time to perform the call tozeroes. However, sincezeroesis actually an external function instead of a BraneScript function, the VM cannot simply change program counters and continue execution. Instead, it will use its plugins to execute the node on whatever backend necessary.

To do so, first the arguments to the external function are popped from the expression stack:Expression stack

[ // They're being processed now ]The VM will then perform the call using the information supplied in the

Nodeand the values supplied by the function arguments. This process is described in TODO.

When the execution completes, the result is pushed onto the stack:Expression stack

[ // The result is a reference to a created dataset { "dtype": "IntermediateResult", // This ID is randomly generated by the compiler "value": "result_foo", } ]Then, execution of the graph continues as normal, as if the call returned immediately.

-

Return-edge: After the task has been processed, thegenerate_datasetfunction completes.

When a Return-edge is executed, it first pops the current frame from the frame stack to examine what to do next:Frame stack

[ // Only the main frame remains { "func": MAX, "vars": [ ("zeroes", "IntermediateResult"), ("ones", "IntermediateResult"), ], "pc": null } ]Based on this, the VM now first removes the variables from the variable register that were local to this function:

Variable register

{ // Variable `n` is gone now... 4: { "name": "zeroes", "type": "IntermediateResult", "val": null } }Then, based on the definition of the called function, the VM examines if a value needs to be returned. If so, the expression stack is verified to see if this is indeed what is on top of the stack; and in this case, it will see that this is so.

The VM is now ready to jump back to the next edge in<main>, and thus updates the program counter accordingly:Program counter

(MAX, 2, 0) // Points back into `<main>`, to the second `Linear`and then it executes the program from there onwards.

More calls

-

Linear-edge: In the next edge in<main>, the embedded instructions make two things happen:- The result of the call to

generate_datasetis stored inzeroes(.set zeroes); and - The arguments to the next call (

add_const_to(2, zeroes)) are being prepared. This is done by first pushing the constant2, and then extending that with the value currently inzeroes.

After everything is processed, the variable register and expression stack look like:

Variable register

{ // Zeroes has been given a value 4: { "name": "zeroes", "type": "IntermediateResult", "val": "result_foo" }, // `ones` has been declared but uninitialized 5: { "name": "ones", "type": "IntermediateResult", "val": null } }Expression stack

[ // The stack now contains the arguments (first at the bottom), then the function pointer to call { "dtype": "function", "value": 5 }, { "dtype": "IntermediateResult", "value": "result_foo" }, { "dtype": "int", "value": 2 }, ] - The result of the call to

-

Call-edge: Theadd_const_tofunction is called, exactly like the previous function. We won't repeat the full call here, but instead just continue after the call returned.Expression stack

[ // The reference to the next result is on the stack, produced by `add_const`. { "dtype": "IntermediateResult", "value": "result_bar" } ] -

Linear-edge: The final call is being prepared in this Edge. This simply relates to storing the result of the previous call, and then callingcat_dataon it.Variable register

{ 4: { "name": "zeroes", "type": "IntermediateResult", "val": "result_foo" }, // `ones` has been updated with the result of the `add_const_to` call 5: { "name": "ones", "type": "IntermediateResult", "val": "result_bar" } }Expression stack

[ // The function to call and then the argument to call it with { "dtype": "function", "value": 6 }, { "dtype": "IntermediateResult", "value": "result_bar" } ] -

Call-edge: The call tocat_datais processed as normal, except that a special call happens inside of it; after thecat-task has been processed, its result isprintlned, which is a builtin function. This function makes the VM pop a value, and then print that to the stdout (or something similar) appropriate to the environment the VM is being executed in using one of its plugins. This means that it's not a full call using program counter jumps, but instead processing that happens in the Call itself.

Sincecat_dataitself does not return, the final state change of the program is that the expression stack is now empty:Expression stack

[ // All values have been popped ]

Result

Stop-edge: When this edge is reached, the VM quits and cleans up. This latter part is done implicitly by unallocating all of its resources.

This concludes the execution of our workflow, which has produced the output of the dataset on stdout. Any intermediate results will now be destroyed, and the entity managing the VM can do other stuff again.

Next

That concludes the chapters on the VM!

The next step could be examining how tasks are being executed on the rest of the Brane system (see the TODO-chapter). Alternatively, you can also learn more about BraneScript by going to the Appendix. Or you can select any other topic in the sidebar on the left.

Bringing it together - Workflow example

This page contains the workflow used in the Bringing it together chapter.

Three representations are given: BraneScript, WIR (using a more readable syntax than JSON) and a visual graph representation.

BraneScript

The snippet in Listing 1 shows the example workflow described using BraneScript.

// See https://github.com/epi-project/brane-std

import cat; // Provides `cat()`

import data_init; // Provides `zeroes()`

import data_math; // Provides `add_const()`

// Function to generate a dataset that is a "vector" of `n` zeroes

func generate_dataset(n) {

return zeroes(n, "vector");

}

// Function to add some constant to a dataset

func add_const_to(val, data) {

return add_const(data, val, "vector");

}

// Function to print a dataset

func cat_data(data) {

println(cat(data, "data"));

}

// Run the script

let zeroes := generate_dataset(3 + 3);

let ones := add_const_to(2, zeroes);

cat_data(ones);

Listing 1: The example workflow for the Bringing it together chapter. It is denoted in BraneScript.

WIR

The snippet in Listing 2 shows the equivalent WIR to the workflow given in Listing 1.

Table {

Variables [

0: n (Any),

1: val (Any),

2: data (Any),

3: data (Any),

4: zeroes (IntermediateResult),

5: ones (IntermediateResult),

],

Functions [

0: print(String),

1: println(String),

2: len(...),

3: commit_result(...),

4: generate_dataset(Any) -> IntermediateResult,

5: add_const_to(Any, Any) -> IntermediateResult,

6: cat_data(Any),

],

Tasks [

0: cat<1.0.0>::cat_range_base64(...),

1: cat<1.0.0>::cat_range(...),

2: cat<1.0.0>::cat(IntermediateResult, String) -> String,

3: cat<1.0.0>::cat_base64(...),

4: data_init<1.0.0>::zeroes(Integer, String) -> IntermediateResult,

5: data_math<1.0.0>::add_const(IntermediateResult, Real, String) -> IntermediateResult,

],

Classes [

0: Data { name: String },

1: IntermediateResult { .. },

]

}

Workflow [

<main> [

Linear [

.dec zeroes

.int 3

.int 3

.add

.func generate_dataset

],

Call,

Linear [

.set zeroes

.dec ones

.int 2

.get zeroes

.func add_const_to

],

Call,

Linear [

.set ones

.get ones

.func cat_data

],

Call,

Stop

],

func 4 (generate_dataset) [

Linear [

.dec n

.set n

.get n

.cast Integer

.str "vector"

],

Node<4> (data_init<1.0.0>::zeroes),

Return

],

func 5 (add_const_to) [

Linear [

.dec data

.set data

.dec val

.set val

.get data

.cast IntermediateResult

.get val

.cast Real

.str "vector"

],

Node<5> (data_math<1.0.0>::add_const),

Return

],

func 6 (cat_data) [

Linear [

.dec data

.set data

.get data

.cast IntermediateResult

.str "data"

],

Node<2> (cat<1.0.0>::cat),

Linear [

.func println,

],

Call,

Return

],

]

Listing 2: A WIR-representation of the workflow given in Listing 1. This snippet uses freeform syntax to be more readable than the WIR's JSON.

Graph

Figure 1 shows the visual representation of the workflow given in Listing 1 and Listing 2.

Figure 1: Visual representation of the example workflow for the Bringing it together chapter. The workflow is described as a WIR-workflow, using WIR-graph elements and instructions.

Figure 1: Visual representation of the example workflow for the Bringing it together chapter. The workflow is described as a WIR-workflow, using WIR-graph elements and instructions.

Services

Brane is implemented as a collection of services, and in the next few chapters we will introduce and discuss every of them. As we do so, we also discuss algorithms and representation used in order to make sense of the services.

Central services

The services found in the central orchestrator are:

- brane-drv implements the driver and acts as both the entrypoint and execution engine to the rest of the framework.

- brane-plr implements the planner and is responsible for deducing missing information in the submitted workflow.

- brane-api implements the global audit log and provides global information to other services in the framework.

- brane-prx implements the proxy and fascilitates and polices inter-node communication.

The following services are placed on each domain:

- brane-job implements the worker and processes the incoming events from the driver.

- brane-reg implements the local audit log and provides local information to its

brane-joband the central registrybrane-api. It also implements partial worker functionality by fascilitating data transfers. - brane-prx implements the proxy and fascilitates and polices inter-node communication.

brane-drv: The driver

Arguably the most central to the framework is the brane-drv service. It implements the driver, the entrypoint and main engine behind the other services in the framework.

In a nutshell, the brane-drv-service waits for a user to submit a workflow (given in the Workflow Internal Representation (WIR)), prepares it for execution by sending it to brane-plr and subsequently executes it.

This chapter will focus on the latter function mostly, which is executing the workflow in WIR-format. For the part of the driver that does user interaction, see the specification; and for the interaction with brane-plr, see that service's chapter.

The driver as a VM

The reference implementation defines a generic Vm-trait that takes care of most of the execution of a workflow. Importantly, it leaves any operation that may require outside influence open for specific implementations;

This is done by following the procedure described in the next few subsections. First, we will discuss how the edges in a workflow graph are traversed and executed, after which we describe some implementation details of executing the instructions embedded within them. Then, finally, we will describe the procedure of executing a task from the driver's perspective.

Unspooling workflows

In the WIR specification, the workflow graph is defined as a series of modular "Edges" that compose the graph as a whole. Every such component can be thought of as having connectors, where it gets traversed from its incoming to one of its outgoing connectors. Which connector is taken, then, depends on the conditional branches embedded in the graph.

To execute the conditional branches, the driver defines a workflow-local stack on which values can be pushed to and popped from as the traversing of a workflow occurs.

The driver always starts at the first edge in the graph-part of the WIR, which represents the main body of the workflow to execute. Starting at its incoming connector, the edge is executed and the driver moves to the next one indicated by its outgoing connector. This process is dependent on which edge is taken:

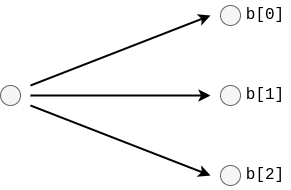

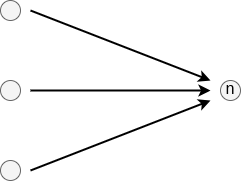

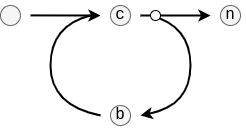

Linear: As the name suggests, this edge always progresses to a static, single next edge. However, attached to this edge may be any number ofEdgeInstructions that manipulate the stack (see below).Node: A linear edge as well, this edge always progresses to a static, single next edge. However, attached to this edge is a task call that must be executed before continuing (see below).Stop: An edge that has no outgoing connector. Whenever this one is traversed, the driver simply stops executing the workflow and completes the interaction with the user.Branch: An edge that optionally takes one of two chains of edges (i.e., it has two outgoing connectors). Which of the two is taken depends on the current state of the stack.Parallel: An edge that has multiple outgoing connectors, but where all of those are taken concurrently. This edge is matches with aJoin-edge that merges the chains back together to one connector.Join: A counterpart to theParallelthat joins the concurrent streams of edges back into one outgoing connector. Doing so, the join may combine results coming from each branch in hardcoded, but configurable, ways.Loop: An edge that represents a conditional loop. Specifically, it has three connectors: one that points to a stream of edges for preparing the stack to analyse the condition at the start of every iteration; one that represents the edges that are repeatedly taken; and then one that is taken when the loop stops.Call: An edge that emulates a function call. While it is represented like a linear edge, first, a secondary body of edges is taken depending on the function identifier annotated to this edge. The driver only resumes traversing the outgoing connector once the secondary body hits aReturnedge.Return: An edge that lets a secondary function body return to the place where it was called from. Like function returns, this may manipulate the stack to push back function results.

Executing instructions

A few auxillary systems have to be in place, besides the stack, that is necessary for executing instructions.

First, the driver implements a frame stack as a separate entity from the stack itself. This defines where the driver should return to after every successive Call-edge, as well as any variables that are defined in that function body (such that they may be undeclared upon a return). Further, the framestack is also used to manage shadowing variable-, function- or type-declarations, as the current implementation does not do any name mangling.

Next, the driver also implements a variable register. This is used to keep track of values with a different lifetime from typical stack values, and that need to be stored temporarily during execution. Essentially, the variable register is a table of variable identifiers to values, where variables can be added (VarDec), removed (VarUndec), assigned new values (VarSet) or asked to provide their stored values (VarGet).

brane-plr: The planner

This chapter will be written soon.

brane-api: The global audit log

This chapter will be written soon.

brane-job: The worker

This chapter will be written soon.

brane-reg: The local audit log

This chapter will be written soon.

brane-prx: The proxy

This chapter will be written soon.

Introduction

Workflow Internal Representation

The Brane framework is designed to accept and run workflows, which are graph-like structures describing a particular set of tasks and how information flows between them. The Workflow Internal Representation (WIR) is the common representation that Brane receives from all its various frontends.

In these few chapters, the WIR is introduced and defined. For the remainder of this chapter, we will give context to the WIR about why it exists. In next chapters, we will then state its specification.

Two levels of workflow

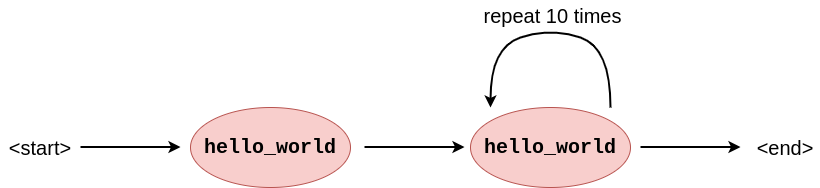

Typically, we think of a workflow as a graph-like structure that contains only essential information about how to execute the tasks. Figure 1 shows an example of such a graph.

Figure 1: An example workflow graph. The node indicate a particular function, where the arrows indicate how data flows through them (i.e., it specifies data dependencies). Some nodes (i in this example), may influence the control flow dynamically to introduce branches or loops.

This is a very useful representation for policy interpretation, because policy tends to concern itself about data and how it flows. The typical workflow graph lends itself well to this kind of analysis, since reasoners can simply traverse the graph and analyse which task is executed where to see which sites may see their data or new datasets computed based on their data.

However, for Brane, this representation is incomplete. Due to its need to support scripting-like workflow languages, workflows have non-trivial control flow evaluation; for example, Brane workflows are not Directed A-cyclic Graphs (DAGs), since they may encode iterations, and control flow may depend on arbitrarily complex expressions defined only in the workflow file.

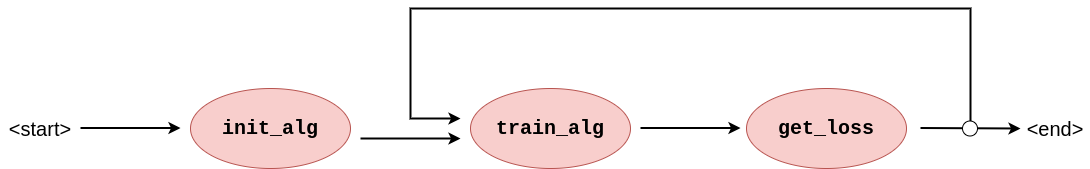

For example, if we take BraneScript as the workflow language, the user might express an intuitive workflow as seen in Listing 1. This workflow runs a particular task (train_alg()) conditionally, based on some loss that it returns. This is a typical example of a task that needs to run until it converges.

import ml_package;

let loss := 1.0;

let alg := init_alg();

while (loss < 1.0) {

alg := train_alg(alg);

loss := get_loss(alg);

}

Listing 1: Example snippet of BraneScript that shows a workflow with a conditional branch.

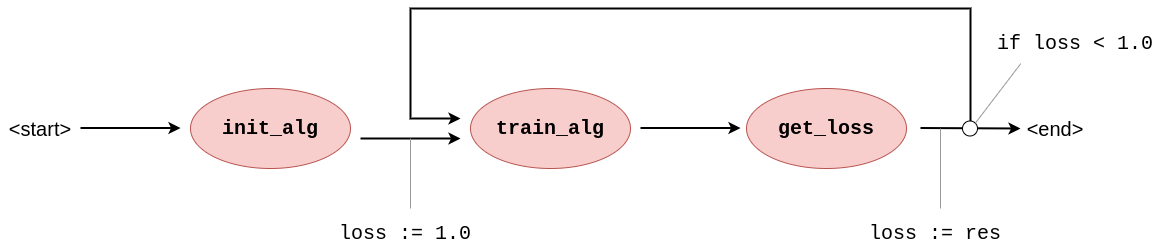

If we simply represent this as a graph of tasks with data-dependencies, the information on the condition part of the iteration gets lost: the graph does not tell us what to condition on or how to initialise the loss-variable (Figure 2). As such, the WIR has to represent also the control flow layer (Figure 3); and because this control flow can consist of multiple statements and arbitrarily complex expressions, this control flow layer needs describe a full layer of execution as well.

Figure 2: Graph representation of Listing 1 showing only the high-level aspects typically found in workflows. With only this information, the condition of the loop is non-obvious, as are its starting conditions.

Figure 3: Graph representation of Listing 1 including the control flow annotations on its edges. Now, the workflow contains information on how to check the condition and update it every iteration.

In Brane, the WIR thus balances the requirements of the two services that interact with it:

- The

brane-drv-service prefers to represent a workflow imperatively, with statements performing some work "in-driver" (i.e., control flow execution) and some work "in-task" (such that it can touch sensitive data). - The checker-service, on the other hand, needs to learn about data dependencies and how data moves from location to location.

WIR to the rescue

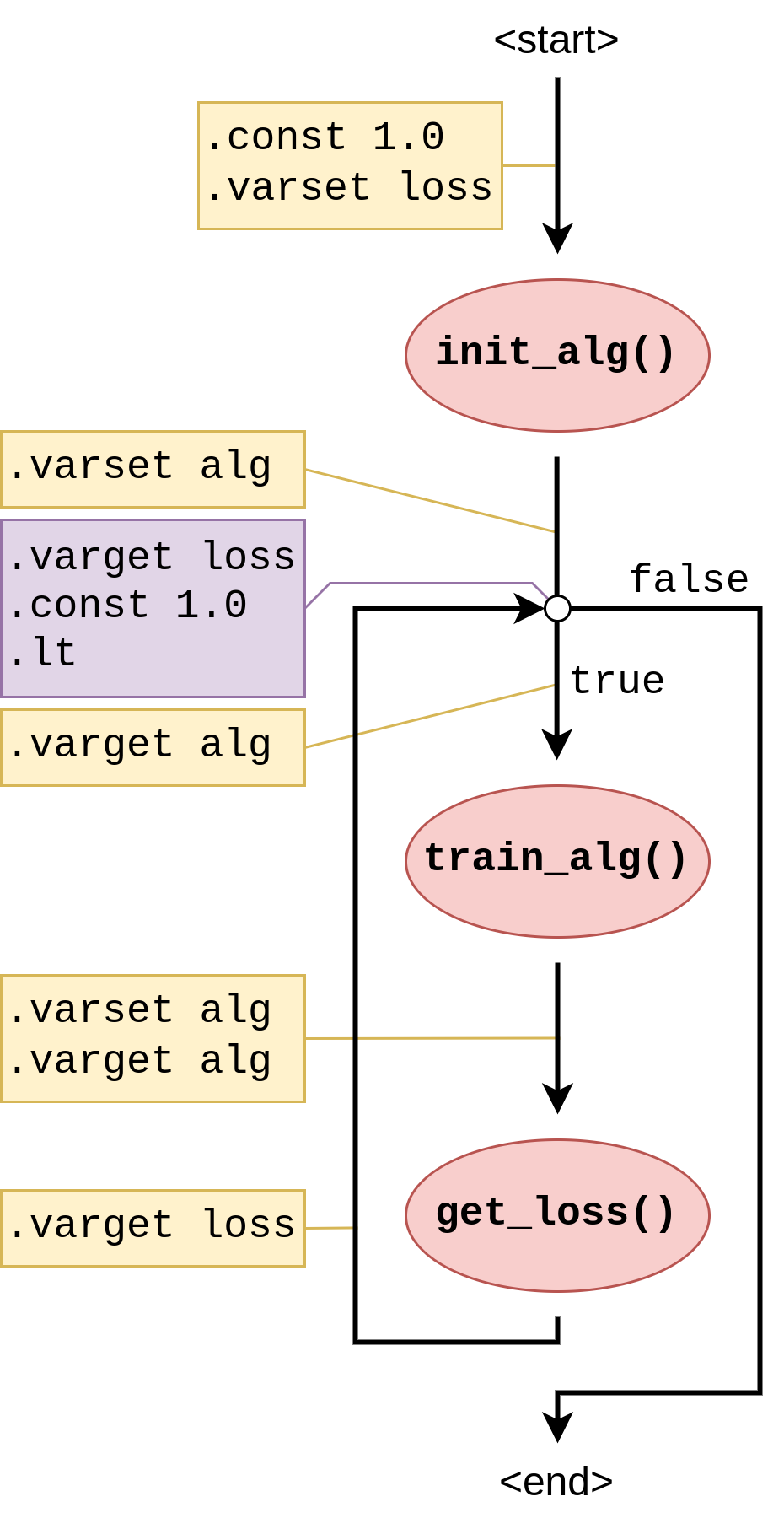

As a solution to embed the two levels of execution, the WIR is structured very much like the graph in Figure 3, except that the annotations are encoded as stack instructions that pop values from- or push values to a stack in the runtime. Figure 4 shows a graph with such instructions.

Figure 4: Graph representation of Listing 1 including WIR-instruction annotations on its edges. Now, an interpreter can follow the workflow's structure and execute the instructions as it goes, manipulating the stack as it goes until conditional control flow decisions are encountered that examine the current stack to make a decision.

At the toplevel, the WIR denotes a graph, where nodes represent task execution steps and edges represent transitions between them. To support parallel execution, branches, loops, etc, these edges can connect more than two nodes (or less, to represent start- or stop edges).

Then, these edges are annotated with edge instructions that are byte-like instructions encoding the control flow execution required to make conditional decisions. They manipulate a stack, and this stack then serves as input to either certain types of edges (e.g., a branch) or to tasks to allow them to portray conditional behaviour as well.

Functions

As an additional complexity, the WIR needs to have support for functions (since they are supported by BraneScript). Besides saving executable size by re-using code at different places in the execution, functions also allow for the use of specialised programming paradigms like recursion. Thus, the graph structure of the WIR needs to have a similar concept.

Functions in the WIR work similarly to functions in a procedural language. They can be thought of as snippets of the graph that are kept separately and can then be called using a special type of edges that transitions to the snippet graph first before continuing with its actual connection. This process is visualised in Figure 5.

Figure 5: Graphs showing a "graph call", which is how the WIR represents function call. The edge connecting task_f to task_i implements the call by first executing the snippet task_g -> task_h before continuing to its original destination.

To emulate the full statement function, the edge also transfers some of the stack elements in the calling graph to the stack frame of the snippet graph. This way, arguments can be passed from one part of the graph to another to change its control flow as expected.

Next

In the next chapter, we introduce the toplevel structure of the WIR and how it is generally encoded. Then, in the next two chapters, we introduce the schemas for the graph structure, which can be thought of as the "higher-level layer" in the WIR, and the instructions, which can be thought of as the "lower-level layer" in the WIR.

If you are instead interested in how to execute a WIR-workflow, consult the documentation on the brane-drv-service. Analysing a WIR is for now done in the brane-plr-service only.

Toplevel schema

In this chapter, we will discuss specification for the toplevel part of the WIR. Its sub-parts, the graph representation and edge instructions, are discussed in subsequent chapters.